From Big to Smart Data in 5 Simple Steps

Are you the same as me? Tired of hearing buzzwords such as “mining gold in your data”, “finding valuable nuggets of information” or the ever-present “actionable insights”? One of them crops up in almost every vendor pitch you are surely bombarded with. The hype around Big Data has reached fever pitch. On the other hand, we have statements such as the one from Dan Ariely: “Big data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it.” In summary, some kids get Big Data and others (read: most) don’t. It doesn’t have to be that way. Data analytics is not rocket science (at least most of it isn’t).

In this blog post we cut through the fog. We’ll have a look at what the real drivers behind the Big Data revolution are. More importantly, I will show you how you can use data to reduce uncertainty in your decision making.

Big Data B4 Big Data

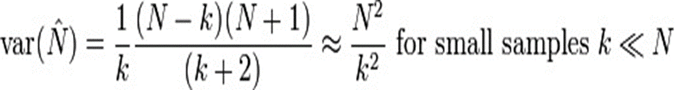

Take a guess: what is the formula shown here?

No idea? I’ll help you. This is the formula that won the Allies World War II. OK, that is a bit of an exaggeration, but it certainly contributed to VE Day. The formula estimates monthly German tank production. Initially the Allies relied on intelligence officers on the ground to gather that information, and their estimates turned out to be wildly inaccurate. Then they came across something else. They noticed that the serial numbers of German tanks were sequence numbers that also contained the tank’s production year. By applying sampling techniques to the serial numbers they observed, they were able to infer the number of tanks produced each month. After the war it turned out that their estimates had been pretty accurate.
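For the curious, here is a minimal Python sketch of the textbook estimator usually associated with this problem. It is an illustration only: I am assuming that serial numbers run consecutively from 1 and that the observed tanks are a random sample. The function name and the sample values are made up, and the formula pictured in this post may be written in a different form.

```python
def estimate_total(serials):
    """Estimate how many units were produced from a sample of serial numbers.

    Textbook estimator: N ~ m + m/k - 1, where m is the largest serial
    observed and k is the sample size. Assumes serials run 1..N without
    gaps and that each serial is observed at most once.
    """
    k = len(serials)
    if k == 0:
        raise ValueError("need at least one serial number")
    if len(set(serials)) != k:
        raise ValueError("serial numbers must be distinct")
    m = max(serials)
    # The largest serial seen, plus the average gap between observed serials.
    return m + m / k - 1


# Hypothetical sample, not historical data.
sample = [19, 40, 42, 60]
estimate = estimate_total(sample)
print(estimate)  # 60 + 60/4 - 1 = 74.0
```

The intuition: the largest serial number you have seen is a lower bound, and the average gap between the numbers you observed tells you roughly how far above that bound the true total is likely to sit.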

As you can see, Smart Data applications existed before the age of Big Data. The analytics, the methodology, the statistics, and the algorithms were all in place. The problem was the availability of the data. As you can imagine, it is hard to collect the serial numbers of German tanks. In the past it generally took a lot of resources and effort just to collect the data.

Digitisation of Everything

Beginning in the late 1980s, data became more and more readily available in 1s and 0s. It was the dawn of the era of the personal computer. Digitisation was upon us. The whole process accelerated further with the arrival of the internet and smart devices. At the turn of the millennium, more than 90% of the world’s data was still in analogue format. This figure has been turned on its head, and now more than 99% of the world’s data is digital. Data is growing at an exponential rate and this rate itself is accelerating. The arrival of the Internet of Things, and of machine-generated data in general, is unleashing another tidal wave of data.

Why is the process of digitisation so important? Information in digital form is easier, cheaper, and quicker to store, process, and share. The high cost of acquiring and processing data (think of the serial numbers of German tanks) has been significantly reduced. This is a great opportunity to base more and more of our decisions and actions on data. Interestingly, in Germany the phenomenon of Big Data is simply referred to as digitisation.

Where is the catch? The mere availability of more data does not guarantee that you will derive value from it. For (big) data to become smart data, and for your organisation to become data-driven, a few more ingredients are needed. This can be summarised in the Big Data formula: Big Data = Digitisation + An opportunity to compete on analytics. The emphasis is on opportunity.

Moore’s Law, Distributed Computing & Open Source

The exponential growth in data coincided with a couple of other relevant developments. Moore’s law of exponential growth in CPU processing power gives us the horsepower to crunch through this data tsunami, at least for the time being. (Interestingly, the data growth rate is greater than the growth rate in processing power; Moore’s law itself will also sooner or later run into the laws of physics.)

Over the last 15 years we have also seen tremendous advances in distributed computing. Distributed computing allows us to process data in parallel on multiple machines or servers. It works particularly well for embarrassingly parallel problems, where the work splits into pieces that need little or no communication with each other. Many of the global web companies started out with traditional, non-distributed technologies, such as MySQL at Facebook. All of these companies quickly hit the ceiling with these hard-to-scale technologies, which scale up on a single machine rather than out across many, and had to come up with their own solutions. Google popularised the MapReduce processing paradigm and the Google File System through a series of white papers, which led to the creation of Hadoop and other distributed computing frameworks. Many of these technologies were open sourced and are now within reach of small and medium-sized enterprises.
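To make the MapReduce idea a little more concrete, here is a toy word count in Python that runs on a single machine. It is a sketch of the paradigm only, not Hadoop code: the function names and the sample documents are invented for illustration, and a real framework would run the map and reduce steps on many machines and handle the grouping step itself.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group all values by key, as a framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # Each document is mapped independently of the others.
    # This is the embarrassingly parallel part of the job.
    mapped = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle(mapped)
    # Each key can likewise be reduced independently.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Made-up input documents.
docs = ["big data", "smart data", "big smart data"]
counts = word_count(docs)
print(counts)
```

Because no map call depends on any other, the documents could just as well be spread across a hundred servers, which is what makes this style of processing scale.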

The result of all of these developments is that we now have smart data applications that would have been unimaginable at the turn of the millennium. Here are a few examples. In 2004 DARPA ran a competition for self-driving cars that was nicknamed the “debacle in the desert” because the race was over after only 6% of the course had been completed. Today self-driving cars are a reality. Only a few years ago, machine-generated translations caused more confusion and embarrassment than they added value in understanding a text. Using data (EU and UN translations in phase one) and text analytics, Google was able to significantly improve machine-generated translations.

Data, Data Everywhere… But no insights in sight.

The great opportunity that the process of digitisation offers is also a great challenge.

“Big Data is Useless. It only gives you Answers.”

This paraphrase of Picasso’s statement about computers being useless neatly summarises Big Data as well. While a lot of companies are now competing on data analytics, many companies at the other end of the spectrum find it increasingly hard to deliver on the promises of Big Data. So why is it that Big Data only lives up to the hype for some companies?

We often come across executives who expect that insights can be generated at the push of a button. Just feed enough data into the sausage machine and insights come out at the other end. However, a Hadoop project that is not embedded in a business context does not deliver any value. Contrary to common belief, the data does not speak to us. We need to stop putting the cart before the horse. The starting point for a Smart Data project should never be the data. Always start with the problem and the question. Be strategic and put the business question into context. Don’t just dive into the data. Those who discovered the wreck of the Titanic had a plan. They did not search every corner of the Atlantic Ocean but put the problem into the context of the available information.

This brings us to the end of the first part of our article on Smart Data. In the second part we will look at the various steps by which you, as an organisation or an individual, can turn data into smart data and reduce uncertainty and risk in your decision making.

If you want to become data-driven, contact us for the Sonra Smart Data workshops.