Query Offload with Redshift Spectrum. Use Cases and Limitations

Table of Contents

Update: An earlier version of this article claimed that Spectrum runs Presto under the hood. This is incorrect. Spectrum uses its own query layer.

Query offload from relational data warehouses to cheaper distributed storage seems to be all the rage these days. In this blog post we examine what works and what the limitations are.

What is Query Offload?

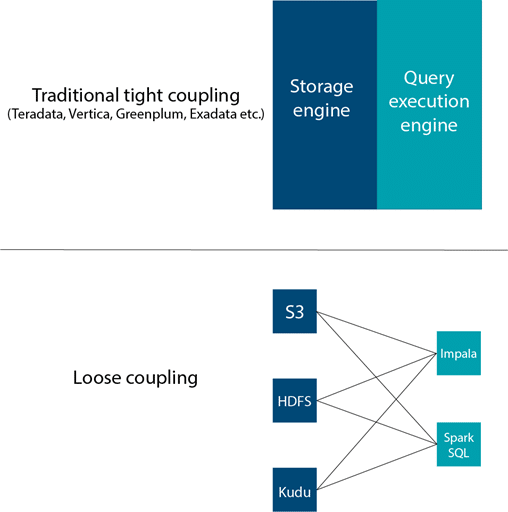

Let’s first define what we mean by query offload. With query offload we either move data from more expensive storage to cheaper storage or from a less scalable platform to a more scalable platform. We then use a query engine that can run federated queries across both storage engines. One core component of query offload is the idea of loosely coupling compute and storage. In the past the query engine and storage engine were packaged up in the same binaries. The query engine was only able to query this particular storage format. In a loosely coupled setup the query engine can be pointed to different types of storage engine and pull the data from there.

I go into a lot of detail on query offload in my course Big Data for data warehouse professionals. It’s highly recommended if you want to find out more on use cases and scenarios 🙂

Query Offload Examples

Let me give you a few examples for query offload.

We can offload large transaction tables from a data warehouse appliance such as Oracle Exadata to the Oracle Big Data Appliance (Cloudera Hadoop). We can then use Oracle Big Data SQL to query the data across both systems.

We can offload a large transaction table from an SMP (single server) such as SQL Server 2016 to Hadoop. We can use MS Polybase (ships with SQL Server 2016) to federate queries across both systems.

In this blog post we will look at how we can offload data from Amazon Redshift to S3 and use Redshift Spectrum. Similar to AWS Athena it allows us to federate data across both S3 and data stored in Redshift. Spectrum uses its own scale out query layer and is able to leverage the Redshift optimizer so it requires a Redshift cluster to access it. Spectrum runs Redshift queries as is, without modification. Based on some tests by Databricks the throughput on HDFS vs S3 is about 6 times bigger.

Query offload does not necessarily have to involve query federation. You can happily offload data from expensive/non-scalable engines to cheaper/scalable engines without the need to stitch the data together.

Query Offload Scenarios on Redshift Spectrum

We will go through various scenarios and for each scenario run a query. We will then inspect the explain plan to see what exactly is going on under the hood. From this information we can draw some conclusions on good use cases for query offload and query federation.

Test Setup

We have a small lookup table (dimension) that resides in Redshift and a large transaction table (fact) that resides in S3 storage. The large table contains a foreign key which references the small table. The small table is called ‘phoneBook’ and consists of phone numbers together with a category (work, friends, school, …).

| phone | category |

|---|---|

| 49606807222 | YOGA |

| 49600731720 | TAXI |

| 49533835656 | FRIENDS |

| 49537572083 | FRIENDS |

| 49609244333 | WORK |

| 49503961861 | FRIENDS |

| 49602697846 | SCHOOL |

| 49519090155 | SCHOOL |

| 49609344355 | YOGA |

|

⋮ | |

The large table is called ‘calls’ and contains information about every call made by users.

| user_id | date_time | phone | duration |

|---|---|---|---|

| 1 | 2016-06-22 16:19:23 | 49790200200 | 5 |

| 1 | 2016-06-22 16:27:23 | 49796574506 | 26 |

| 1 | 2016-06-23 10:26:11 | 49533835656 | 34 |

| 2 | 2016-06-23 12:31:56 | 49570747708 | 90 |

| 2 | 2016-06-23 12:54:38 | 49570747708 | 82 |

| 2 | 2016-06-23 12:56:15 | 49334415970 | 9 |

| 2 | 2016-06-23 14:39:10 | 49792082953 | 5 |

| 1 | 2016-06-23 17:01:36 | 49796574506 | 203 |

| 2 | 2016-06-23 18:15:05 | 49508432274 | 260 |

| ⋮ | |||

For simplicity reasons we did not serialize the data in Parquet. We would not expect any differences in the results for these queries.

Scenario 1: Aggregation on the table inside S3

First, we execute a simple aggregation on the table calls without any involvement of the phoneBook. We are summing the duration of all calls for a user:

|

1 2 3 4 5 |

SELECT user_id, SUM(duration) FROM S3.calls GROUP BY user_id; |

Execution plan:

|

1 2 3 4 |

XN Aggregate (cost=125000012.50..125000012.50 rows=1 width=8) -> XN S3 Query Scan calls (cost=125000000.00..125000010.00 rows=1000 width=8) -> S3 Aggregate (cost=125000000.00..125000000.00 rows=1000 width=0) -> S3 Seq Scan s3.calls location:"s3://sonra-sample-east/test" format:TEXT (cost=0.00..100000000.00 rows=10000000000 width=0) |

All operations that contain S3 in the name are executed in the RS Spectrum layer directly against the data in S3. In the plan, we can see that the aggregation was performed in the Spectrum layer (S3 Aggregate). Redshift just receives the aggregated resultset instead of the whole table. The amount of data that needs to travel across the network is minimal. No surprises here.

Scenario 2: Aggregation after federated join

Now let’s compute the total duration aggregated over the category. To achieve this, we need to join the tables on the phone field first. Phone is the primary key in the lookup table.

|

1 2 3 4 5 6 7 |

SELECT phoneBook.category, SUM(calls.duration) AS secs_total FROM S3.calls AS calls JOIN phoneBook ON (calls.phone = phoneBook.phone) GROUP BY phoneBook.category; |

Execution plan:

|

1 2 3 4 5 6 7 |

XN HashAggregate (cost=548920002.89..548920002.90 rows=6 width=12) -> XN Hash Join DS_BCAST_INNER (cost=2.89..498920002.89 rows=10000000000 width=12) Hash Cond: (("outer".phone)::text = ("inner".phone)::text) -> XN S3 Query Scan calls (cost=0.00..200000000.00 rows=10000000000 width=520) -> S3 Seq Scan s3.calls location:"s3://sonra-sample-east/test" format:TEXT (cost=0.00..100000000.00 rows=10000000000 width=520) -> XN Hash (cost=2.31..2.31 rows=231 width=22) -> XN Seq Scan on phonebook (cost=0.00..2.31 rows=231 width=22) |

No data from Redshift can be pushed into S3, therefore, the join of the tables was executed in Redshift as well as the aggregation. The whole table containing 3 million rows was transferred from S3 to Redshift. We need to transfer the whole dataset from S3 to Redshift. If we rerun the query, the data needs to be transferred again. For a very large transaction table this is probably not such a good idea. For federated aggregations query offload does not work.

Having said that we can rewrite our query somewhat to reduce the amount of data that needs to travel across the network.

Scenario 3: Join after aggregation

We can first aggregate by phone in the calls table, send the result to Redshift, join to phoneBook table in Redshift on the phone key, and perform a second level aggregation inside Redshift.

|

1 2 3 4 5 6 7 |

SELECT phoneBook.category, SUM(groupedCalls.duration) FROM phoneBook JOIN (SELECT phone, SUM(duration) AS duration FROM S3.calls GROUP BY phone) AS groupedCalls ON (phoneBook.phone = groupedCalls.phone) GROUP BY phoneBook.category; |

Execution plan:

|

1 2 3 4 5 6 7 8 9 10 |

XN HashAggregate (cost=223923011.39..223923011.40 rows=6 width=16) -> XN Hash Join DS_BCAST_INNER (cost=150003003.39..223923010.39 rows=200 width=16) Hash Cond: (("outer".phone)::text = ("inner".phone)::text) -> XN Subquery Scan groupedcalls (cost=150003000.50..150003003.00 rows=200 width=524) -> XN HashAggregate (cost=150003000.50..150003001.00 rows=200 width=524) -> XN S3 Query Scan calls (cost=150000000.00..150002000.50 rows=200000 width=524) -> S3 HashAggregate (cost=150000000.00..150000000.50 rows=200000 width=520) -> S3 Seq Scan s3.calls location:"s3://sonra-sample-east/test" format:TEXT (cost=0.00..100000000.00 rows=10000000000 width=520) -> XN Hash (cost=2.31..2.31 rows=231 width=22) -> XN Seq Scan on phonebook (cost=0.00..2.31 rows=231 width=22) |

The duration is summed by phone in the Spectrum layer and only after the join, it is summed over the category in Redshift. We send a lot less data across the network to Redshift. This trick only works with additive aggregations such as SUM, COUNT, AVG (SUM/COUNT) etc.

Scenario 4: Both tables in S3

We can test the second query in a similar situation, except that now both the tables reside in the S3 storage. We might expect the joining and aggregation to be executed entirely in the Spectrum layer. Let’s see what happens:

|

1 2 3 4 5 6 7 8 |

XN HashAggregate (cost=160300000450000000.00..160300000450000000.00 rows=200 width=406) -> XN Hash Join DS_BCAST_INNER (cost=225000000.00..157800000450000000.00 rows=500000000000000000 width=406) Hash Cond: (("outer".phone)::text = ("inner".phone)::text) -> XN S3 Query Scan phonebook (cost=0.00..200000000.00 rows=10000000000 width=804) -> S3 Seq Scan s3.phonebook location:"s3://sonra-sample-east/phonebook" format:TEXT (cost=0.00..100000000.00 rows=10000000000 width=804) -> XN Hash (cost=200000000.00..200000000.00 rows=10000000000 width=520) -> XN S3 Query Scan calls (cost=0.00..200000000.00 rows=10000000000 width=520) -> S3 Seq Scan s3.calls location:"s3://sonra-sample-east/test" format:TEXT (cost=0.00..100000000.00 rows=10000000000 width=520) |

We can see that Spectrum did not join the tables. It only performed a sequential scan of the tables and both tables were transferred to Redshift for joining. This outcome might be a bit surprising since the transfer of both tables seems unnecessary. We then set some basic statistics such as number of rows per table. This changed the hashing. Spectrum now knew that phoneBook is the smaller table and it hashed this table rather than the calls table. The join still happened in S3. Maybe our fact table was too small or Spectrum itself does not support joins.

Query Offload Summary

Offloading a fact table to Spectrum and keeping dimension tables in Redshift only works for artificial use cases where we aggregate by the dimension keys. In reality this rarely happens. In practical terms query offload does not work for fact table offloads as most of the time we need to send the whole fact table across to Redshift. One option here for Redshift would be to broadcast the dimension table to Spectrum and perform the join there prior to aggregation. We have shown you a trick that pushes down the first level of aggregation on the dimension key down to Spectrum and performs second level aggregations in Redshift. This has various limitations, e.g. it only works for additive aggregations, it requires subqueries and more awkward and less readable SQL. It might work for your scenario though. The conclusion here applies to all federated query engines. It is not only a limitation of Redshift Spectrum.

Spectrum did not join the dimension table to the fact table even after we set some basic statistics. Maybe our fact table wasn’t large enough. It had to pull both tables into Redshift and perform the join there. As a recommendation you should completely denormalize and flatten any data prior to querying it in Spectrum.

As a conclusion, query federation across Redshift and Spectrum only works for a very limited set of scenarios. When offloading data to S3 it is best to not only offload your fact table, but also to offload all of your dimension tables. On top of this, we recommend to completely flatten your data in S3 and avoid any and all joins. I have discussed de-normalization of dimensional models at length in my recent post on dimensional models in the age of Big Data. If you want to stitch results together for drill across then join the results of aggregated data across layers on conformed columns. You could probably achieve something similar with your BI tool.

Redshift Spectrum Concurrency and Latency

One very last comment. The Redshift Spectrum best practice guide recommends using Spectrum to increase Redshift query concurrency. This in my opinion is a very good use case as long as you follow our advice and can tolerate higher query latency for the queries you run against Spectrum. We performed some basic tests where we ran the same aggregate query multiple times in concurrent sessions. Query latency goes up in line with concurrency. Running one query took 5.5 s. Running 5 queries concurrently took 19.5 s. All five queries finished between 19 and 19.5 s. Once we went above 5 concurrent queries we saw that 4 queries finished quicker than the remaining queries. The difference was in the region of 25-30%, e.g. execution times for seven concurrent queries (all queries are the same) 18s, 18s, 18.5s, 19s, 23s, 27s, 27s. This could indicate that queries are queued once we go beyond a certain concurrency threshold. Based on input from Athena product management the level of concurrency is a soft limit, which can be raised for specific use cases.

As a side note, Redshift Spectrum does not seem to cache resultsets. Neither can it push down window functions into the Spectrum layer. Athena/Presto can run window functions. It’s early days though with this product. I would say let’s wait and see.