Streaming Tweets to Snowflake Data Warehouse with Spark Structured Streaming and Kafka


Streaming architecture

In this post we will build a system that ingests real-time data from Twitter, packages it as JSON objects and sends it through a Kafka Producer to a Kafka Cluster. A Spark Structured Streaming application will then consume those JSON tweets and stream them in micro-batches into the Snowflake data warehouse. We will then show how easy it is to query JSON data in Snowflake. If you have XML data, make sure to look at our other post where we load and query XML data in Snowflake with Flexter, our enterprise XML converter.


About Snowflake

Snowflake is an analytic database provided as Software-as-a-Service (SaaS); at the time of writing it ran entirely on Amazon Web Services (AWS). It is built for analytical queries of any size, using columnar storage and an architecture that Snowflake describes as a hybrid of shared-disk and shared-nothing designs. Its architecture separates compute from storage, allowing you to scale resources elastically.

In Snowflake, storage and compute are billed separately. Stored data is charged at a comparatively low monthly rate, while compute is charged only while a virtual warehouse (Snowflake's compute engine) is running, i.e. while you are interacting with your data. This means that you can suspend the warehouse during the hours you are not using your Snowflake data warehouse. You can also easily scale the compute resources used by Snowflake. For example, you can use four compute nodes for your intensive once-a-month queries and two nodes for your daily ETL processes.

Snowflake also provides an elegant interface for running queries on semi-structured data such as JSON, which we will use in this post.

Preparing the Snowflake Database

The goal of this system is to stream real-time tweets in JSON format into our Snowflake data warehouse. We will start off by creating a simple table in Snowflake that will store our tweets in JSON format. Snowflake supports various semi-structured data formats (JSON, Avro, ORC, Parquet, or XML). We are going to store each tweet in a column of the VARIANT data type, which Snowflake uses to hold semi-structured values such as JSON.

```sql
CREATE TABLE tweets (JSON variant);
```

In the following parts we will build a data pipeline to populate this table with real-time tweets.

Configuring Kafka

To use Kafka for message transmission we will need to start a Kafka Broker. A single Kafka Cluster can consist of multiple Kafka Brokers where each broker takes a part of the message transmission load. To start a Kafka Broker we have to first start Zookeeper (which is used for coordination) and then the Kafka Broker. We can do that with the following two command line statements:

```shell
zkServer start
kafka-server-start /usr/local/etc/kafka/server.properties
```

Now that the Kafka Broker is running on our machine, it can be accessed at localhost on port 9092 (Kafka's default listener port).
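Before pointing a producer at the broker, it can help to confirm that something is actually listening on that port. The sketch below is a hypothetical helper (broker_port_open is not part of any Kafka library) that uses only Python's socket module. A successful TCP connection shows only that the port is open, not that the process behind it is a healthy Kafka broker.

```python
import socket


def broker_port_open(host: str = "localhost", port: int = 9092, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established.

    This does not speak the Kafka protocol; it only checks that some process
    accepts connections on the port (a necessary, not sufficient, condition).
    """
    try:
        # create_connection resolves the host and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name-resolution failures
        return False


if __name__ == "__main__":
    print("Kafka port reachable:", broker_port_open())
```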

Ingesting real time data from Twitter

We will use Twitter's Developer API to search the text of recent public tweets for a pattern. Specifically, we are going to search for tweets containing the keyword "ESPN", but you can search for any pattern.

In Python we can easily access the Twitter Developer API using the tweepy library:

```python
import tweepy

# Twitter API credentials from your Twitter developer app
consumer_key = "<your consumer key>"
consumer_secret = "<your consumer secret>"
access_token = "<your access token>"
access_token_secret = "<your access token secret>"

# Create the authentication object
auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
# Set your access token and secret
auth.set_access_token(access_token, access_token_secret)
# Create the API object by passing in the authentication information
api = tweepy.API(auth)
```
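Hard-coding secrets in source files makes them easy to leak, for example through version control. A common alternative is to read them from environment variables. The sketch below assumes hypothetical variable names (TWITTER_CONSUMER_KEY and so on) and a hypothetical helper, load_twitter_credentials, that fails fast if any value is missing.

```python
import os

# Hypothetical environment variable names; adapt them to your deployment.
CREDENTIAL_VARS = {
    "consumer_key": "TWITTER_CONSUMER_KEY",
    "consumer_secret": "TWITTER_CONSUMER_SECRET",
    "access_token": "TWITTER_ACCESS_TOKEN",
    "access_token_secret": "TWITTER_ACCESS_TOKEN_SECRET",
}


def load_twitter_credentials(environ=os.environ) -> dict:
    """Read the four OAuth values from the environment.

    Raises KeyError naming every missing (or empty) variable, so a
    misconfigured job stops immediately instead of calling Twitter with
    empty credentials.
    """
    missing = [var for var in CREDENTIAL_VARS.values() if not environ.get(var)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {name: environ[var] for name, var in CREDENTIAL_VARS.items()}
```

The values can then be passed on as before, for example tweepy.OAuthHandler(creds["consumer_key"], creds["consumer_secret"]) with creds = load_twitter_credentials().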

Using the kafka-python library we are going to define a Kafka Producer that sends messages to the appropriate topic of our Kafka Broker:

```python
from kafka import KafkaProducer

producer = KafkaProducer(bootstrap_servers='localhost:9092')
topic_name = 'data-tweets'
```

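Since we did not create the topic "data-tweets" beforehand, it will be created automatically the first time the producer uses it, as long as the broker setting auto.create.topics.enable is true (its default value).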

We can get the result of a search on tweets using:

```python
res = api.search("ESPN")  # this method is called api.search_tweets in tweepy 4.x
for tweet in res:
    pass  # do something with each tweet
```

The result contains almost 50 fields in JSON format but we want to focus only on some important fields of a tweet. Here is a Python list containing only the relevant attributes of a tweet:

```python
important_fields = ['created_at', 'id', 'id_str', 'text', 'retweet_count',
                    'favorite_count', 'favorited', 'retweeted', 'lang']
```
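Selecting these fields is an ordinary dictionary projection over the raw tweet (the dict that tweepy exposes as tweet._json). The hypothetical helper below shows it on a plain dict. Unlike the dictionary comprehension used later in get_twitter_data(), it uses dict.get, so a tweet missing one of the fields yields None instead of raising KeyError.

```python
important_fields = ['created_at', 'id', 'id_str', 'text', 'retweet_count',
                    'favorite_count', 'favorited', 'retweeted', 'lang']


def project_tweet(raw: dict, fields=important_fields) -> dict:
    """Keep only the selected top-level fields of a raw tweet dictionary.

    Fields absent from `raw` are mapped to None rather than raising KeyError.
    """
    return {field: raw.get(field) for field in fields}
```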

In the next step we will define a function that:

- Queries the Twitter API for our search pattern

- Extracts the important fields from the original tweet

- "Cleans" the text attribute of a tweet by removing single quotes ('), double quotes (") and newlines (\n), which otherwise cause problems when the tweet is embedded in the SQL statement that Snowflake parses with parse_json() (this is currently a small limitation)

- Transforms the cleaned tweet into a JSON object and sends it to the Kafka Broker in binary format

```python
import json

def get_twitter_data():
    res = api.search("ESPN")  # query the Twitter API
    for tweet in res:  # iterate over the results
        # keep only the important fields of the raw tweet
        json_tweet = {k: tweet._json[k] for k in important_fields}
        # strip single quotes, double quotes and newlines from the text
        json_tweet['text'] = json_tweet['text'].replace("'", "").replace("\"", "").replace("\n", "")
        # serialize to JSON and send the UTF-8 bytes to Kafka
        producer.send(topic_name, str.encode(json.dumps(json_tweet)))
```
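The cleaning step is a workaround rather than something JSON itself requires: json.dumps already escapes double quotes and newlines, and a single quote needs no escaping in JSON at all. The quick check below, using a made-up tweet text and a hypothetical helper encode_tweet (equivalent to str.encode(json.dumps(...)) above), suggests the characters only become a problem later, when the JSON text is pasted into a single-quoted SQL literal.

```python
import json


def encode_tweet(tweet: dict) -> bytes:
    """Serialize a tweet dict to UTF-8 JSON bytes, like str.encode(json.dumps(...))."""
    return json.dumps(tweet).encode("utf-8")


# Hypothetical tweet text containing every character the cleaning step strips.
sample = {"text": 'He said "it\'s live"\nGo ESPN'}
payload = encode_tweet(sample)

# The JSON is valid as-is: each " became \" and the newline became the two
# characters \ and n, while the single quote is left untouched.
print(payload)
print(json.loads(payload) == sample)  # decoding restores the original text exactly
```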

Lastly, we will define a function that calls get_twitter_data() at a fixed interval to ingest data and runs indefinitely:

```python
import time

def periodic_work(interval):
    """Fetch tweets and push them to Kafka every `interval` seconds, forever."""
    while True:
        get_twitter_data()
        time.sleep(interval)
```
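For testing, or for a job that should stop on its own, a bounded variant may be handy. The sketch below is hypothetical (run_periodically, max_runs and the injectable sleep are not part of the original code): it calls any work function, such as get_twitter_data, at a fixed interval and can stop after a given number of runs.

```python
import time
from typing import Callable, Optional


def run_periodically(work: Callable[[], None], interval: float,
                     max_runs: Optional[int] = None,
                     sleep: Callable[[float], None] = time.sleep) -> int:
    """Call `work` every `interval` seconds.

    Runs forever when max_runs is None (like periodic_work); otherwise stops
    after max_runs calls and returns how many calls were made. `sleep` can be
    replaced in tests so nothing actually waits.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        work()
        runs += 1
        # Skip the final sleep when the run limit has been reached
        if max_runs is None or runs < max_runs:
            sleep(interval)
    return runs
```

For example, run_periodically(get_twitter_data, 60) behaves like periodic_work(60). Because the sleep starts after work() returns, the effective period is the interval plus the duration of each Twitter query.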

Intro to Spark Structured Streaming

Structured Streaming is the new streaming model of the Apache Spark framework. The main idea is that you should not have to reason about streaming, but rather use a single API for both streaming and batch operations. Thus it allows you to write batch queries on your streaming data.

It was inspired by Google's Dataflow Model, which Google made available by open sourcing its Cloud Dataflow SDK as what is now the Apache Beam project. Structured Streaming also adds support for end-to-end exactly-once fault-tolerance guarantees through checkpointing and write-ahead logs, provided the source can be replayed (as Kafka can) and the sink is idempotent. This is a very important feature that stream processors such as Apache Flink, and Apache Beam on runners such as Google Cloud Dataflow, already offered.

In Spark 2.2.0 (which we are using for this blog post), Structured Streaming was declared production-ready (generally available). It is intended to succeed the older Spark Streaming engine, whose DStream API is based on Resilient Distributed Datasets (RDDs).

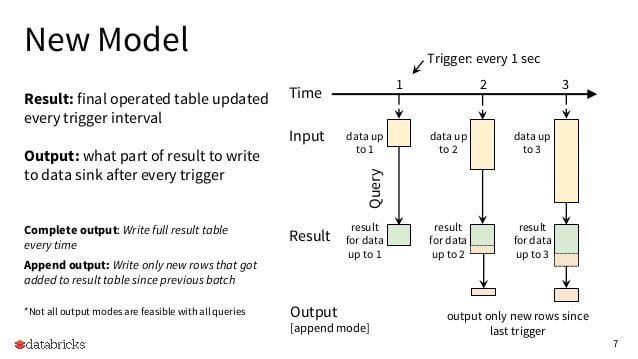

The new model treats streaming data as an unbounded DataFrame, a table to which new rows are continuously appended. This model consists of several components:

- Input – defines the ever increasing dataframe which a data source populates

- Trigger – defines the time between checking for new Input and then running the Query on the new data

- Query – implements operations on input data such as select, group by, filter…

- Result – the result of running the Query on Input

- Output – defines what part of Result to write to the sink
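To make the loop concrete, here is a toy, single-process model of these components in plain Python. It is not Spark's API: ToyStreamingQuery and its methods are hypothetical. For a non-aggregating query such as a select or filter, running the query only on the rows appended since the last trigger produces the same new output as re-running it over the whole input, which is why Append mode can emit just those rows.

```python
from typing import Callable, List

Row = dict


class ToyStreamingQuery:
    """Toy model of Input -> Trigger -> Query -> Result -> Output (Append mode).

    Each trigger runs a non-aggregating query over the input rows that arrived
    since the previous trigger and appends the result rows to the sink.
    """

    def __init__(self, query: Callable[[List[Row]], List[Row]], sink: List[Row]):
        self.input_table: List[Row] = []  # the ever-growing "unbounded" input
        self.query = query                # e.g. a select or filter
        self.sink = sink                  # where output rows are written
        self.offset = 0                   # number of input rows already processed

    def append(self, *rows: Row) -> None:
        """Simulate a source (such as Kafka) appending new rows to the input."""
        self.input_table.extend(rows)

    def trigger(self) -> List[Row]:
        """Process only the new input and append the query result to the sink."""
        new_rows = self.input_table[self.offset:]
        self.offset = len(self.input_table)
        output = self.query(new_rows)
        self.sink.extend(output)
        return output


if __name__ == "__main__":
    sink: List[Row] = []
    # Query: decode the binary value, similar in spirit to CAST(value AS STRING)
    stream = ToyStreamingQuery(
        lambda rows: [{"json_data": r["value"].decode("utf-8")} for r in rows], sink)
    stream.append({"value": b'{"id": 1}'})
    stream.trigger()
    stream.append({"value": b'{"id": 2}'})
    stream.trigger()
    print(sink)
```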

The following picture made by Databricks also graphically summarizes the above mentioned parts of a Structured Streaming architecture:

The picture focuses on a non-aggregation query that uses Append mode as the definition of Output. Please consult the Spark Structured Streaming Programming Guide for a broader overview of the features and an in-depth explanation of how the Query and Output interact.

Using Spark Structured Streaming to deliver data to Snowflake

We are going to use Scala for the following Apache Spark examples. We have to start off by defining the usual Spark SQL entry point:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession
  .builder()
  .appName("Spark Structured Streaming to Snowflake")
  .master("local[*]")
  .getOrCreate()
```

Then we will define a stream that reads data from the “data-tweets” topic of our Kafka Broker:

```scala
val data_stream = spark
  .readStream                                  // constantly expanding DataFrame
  .format("kafka")
  .option("kafka.bootstrap.servers", "localhost:9092")
  .option("subscribe", "data-tweets")
  .option("startingOffsets", "latest")         // or "earliest"
  .load()
```

With Spark we can easily explore the schema of incoming data:

```scala
println(data_stream.schema)
```

And we get the following result:

```text
StructType(
  StructField(key,BinaryType,true),
  StructField(value,BinaryType,true),
  StructField(topic,StringType,true),
  StructField(partition,IntegerType,true),
  StructField(offset,LongType,true),
  StructField(timestamp,TimestampType,true),
  StructField(timestampType,IntegerType,true)
)
```

Our tweets are stored only in the "value" field; the other fields relate to the Kafka messaging protocol. Our goal is to extract only the "value" column, cast it from binary to a string and export it to Snowflake over a JDBC connection.
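In plain Python, the cast and the later JSON parsing amount to decoding UTF-8 bytes and loading JSON. The hypothetical helper below mirrors what CAST(value AS STRING) does to a message produced by get_twitter_data(), assuming (as that producer does) that values are UTF-8 encoded JSON. Kafka also allows a message with a null value, which Spark would cast to NULL; the helper maps it to None.

```python
import json
from typing import Optional


def decode_kafka_value(value: Optional[bytes]) -> Optional[dict]:
    """Decode a Kafka message value (UTF-8 JSON bytes) into a Python dict.

    Returns None for a null value instead of failing.
    """
    if value is None:
        return None
    # Equivalent of CAST(value AS STRING), followed by JSON parsing
    return json.loads(value.decode("utf-8"))
```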

Using the Spark Structured Streaming API we can implement our Structured Streaming model by:

- Using the (above defined) “data_stream” as source of data

- Running a simple select query every 30 seconds

- Implementing a select query that casts the binary message as a string

- Using the append output mode to only output the newest data in the result set to Snowflake.

```scala
import org.apache.spark.sql.streaming.Trigger

val query = data_stream
  .selectExpr("CAST(value AS STRING) as json_data")
  .writeStream
  .foreach(writer)                               // custom JDBC sink for Snowflake
  .trigger(Trigger.ProcessingTime("30 seconds"))
  .outputMode("append")                          // only new rows; suits a non-aggregating query
  .start()
```

The variable "writer" used above represents the Snowflake database, i.e. a JDBC sink. Since the JSON data first has to be parsed by Snowflake's engine, we have to write a custom JDBC sink that uses Snowflake's JDBC driver and its "parse_json()" function to turn JSON strings into the VARIANT data type. Snowflake also supports other loading methods, such as bulk loading staged files with COPY INTO, which perform better but are suited only to loading data in batches.
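The sink embeds each JSON document in a single-quoted SQL string literal. In Snowflake's single-quoted string constants, two adjacent single quotes stand for one quote, and backslash sequences such as \n and \" are interpreted as escapes. That is why raw tweet text can either break the statement or corrupt the JSON before parse_json() sees it. The Python sketch below (snowflake_string_literal and build_insert are hypothetical names) doubles backslashes first and single quotes second, so the literal evaluates back to the exact JSON text. Binding the value through a JDBC PreparedStatement would avoid hand-written escaping altogether and is worth preferring where your driver version supports it.

```python
def snowflake_string_literal(text: str) -> str:
    """Quote `text` as a Snowflake single-quoted string constant.

    Backslashes are doubled first (so existing escapes in the JSON survive),
    then single quotes are doubled; the result evaluates back to `text`.
    """
    return "'" + text.replace("\\", "\\\\").replace("'", "''") + "'"


def build_insert(json_text: str, table: str = "tweets") -> str:
    """Build the insert ... select parse_json(column1) from values (...) statement."""
    return ("insert into " + table + " select parse_json(column1) "
            "from values (" + snowflake_string_literal(json_text) + ");")
```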

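```scala
import java.sql.{Connection, DriverManager, Statement}
import org.apache.spark.sql.{ForeachWriter, Row}

class JDBCSink(url: String, user: String, pwd: String) extends ForeachWriter[Row] {
  val driver = "net.snowflake.client.jdbc.SnowflakeDriver"
  var connection: Connection = _
  var statement: Statement = _

  // Called once per partition and batch: open the JDBC connection
  def open(partitionId: Long, version: Long): Boolean = {
    Class.forName(driver)
    connection = DriverManager.getConnection(url, user, pwd)
    statement = connection.createStatement
    true
  }

  // Called for every row: insert the JSON string, parsed into a VARIANT
  def process(value: Row): Unit = {
    // Escape for a single-quoted Snowflake string literal:
    // double backslashes first, then single quotes
    val json = value.getString(0).replace("\\", "\\\\").replace("'", "''")
    statement.executeUpdate(
      "insert into Tweets " +
      "select parse_json(column1) " +
      "from values ('" + json + "');")
  }

  // Called when the partition is finished (or has failed): release resources
  def close(errorOrNull: Throwable): Unit = {
    if (statement != null) statement.close()
    if (connection != null) connection.close()
  }
}
```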

As you can see, Spark allows simple creation of custom output sinks. The only complex part was using the "parse_json()" function to ingest the JSON data as VARIANT type.

In our code we will instantiate the previously mentioned “writer” JDBC sink by simply using the class we just created:

```scala
val user = "<your username>"
val pwd  = "<your password>"
val db   = "<your database name>"
val wh   = "<your warehouse name>"
val url  = "jdbc:snowflake://<account_name>.<region_id>.snowflakecomputing.com/" +
  "?user=" + user + "&password=" + pwd + "&db=" + db + "&warehouse=" + wh

val writer = new JDBCSink(url, user, pwd)
```
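Because JDBCSink already passes user and pwd to DriverManager.getConnection, the credentials do not strictly need to appear in the URL as well; keeping them out avoids leaking the password into logs and avoids breakage when it contains characters such as & or =. The Python sketch below (snowflake_jdbc_url is a hypothetical helper, and the account and region values in any call are placeholders) builds such a URL with only the database and warehouse, percent-encoding each value.

```python
from urllib.parse import urlencode


def snowflake_jdbc_url(account: str, region: str, db: str, warehouse: str) -> str:
    """Build a Snowflake JDBC URL without embedding credentials.

    Values are percent-encoded so characters such as & or = cannot break the
    query string. Credentials are expected to be passed separately, as
    DriverManager.getConnection(url, user, pwd) does in JDBCSink.
    """
    host = f"{account}.{region}.snowflakecomputing.com"
    params = urlencode({"db": db, "warehouse": warehouse})
    return f"jdbc:snowflake://{host}/?{params}"
```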

The whole Spark Streaming pipeline looks as follows:

```scala
// Requires the spark-sql-kafka-0-10 package (for format("kafka")) and the
// Snowflake JDBC driver on the classpath; JDBCSink is the class defined above.
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.streaming.Trigger

object TweetsToSnowflake {
  def main(args: Array[String]): Unit = {
    val user = "<your username>"
    val pwd  = "<your password>"
    val db   = "<your database name>"
    val wh   = "<your warehouse name>"
    val url  = "jdbc:snowflake://<account_name>.<region_id>.snowflakecomputing.com/" +
      "?user=" + user + "&password=" + pwd + "&db=" + db + "&warehouse=" + wh
    val writer = new JDBCSink(url, user, pwd)

    val spark = SparkSession
      .builder()
      .appName("Spark Structured Streaming to Snowflake")
      .master("local[*]")
      .getOrCreate()

    val data_stream = spark
      .readStream                                // constantly expanding DataFrame
      .format("kafka")
      .option("kafka.bootstrap.servers", "localhost:9092")
      .option("subscribe", "data-tweets")
      .option("startingOffsets", "latest")       // or "earliest"
      .load()

    val query = data_stream
      .selectExpr("CAST(value AS STRING) as json_data")
      .writeStream
      .foreach(writer)
      .trigger(Trigger.ProcessingTime("30 seconds"))
      .outputMode("append")                      // only new rows; suits a non-aggregating query
      .start()

    // Block the driver so the streaming query keeps running
    query.awaitTermination()
  }
}
```

Querying the data in Snowflake

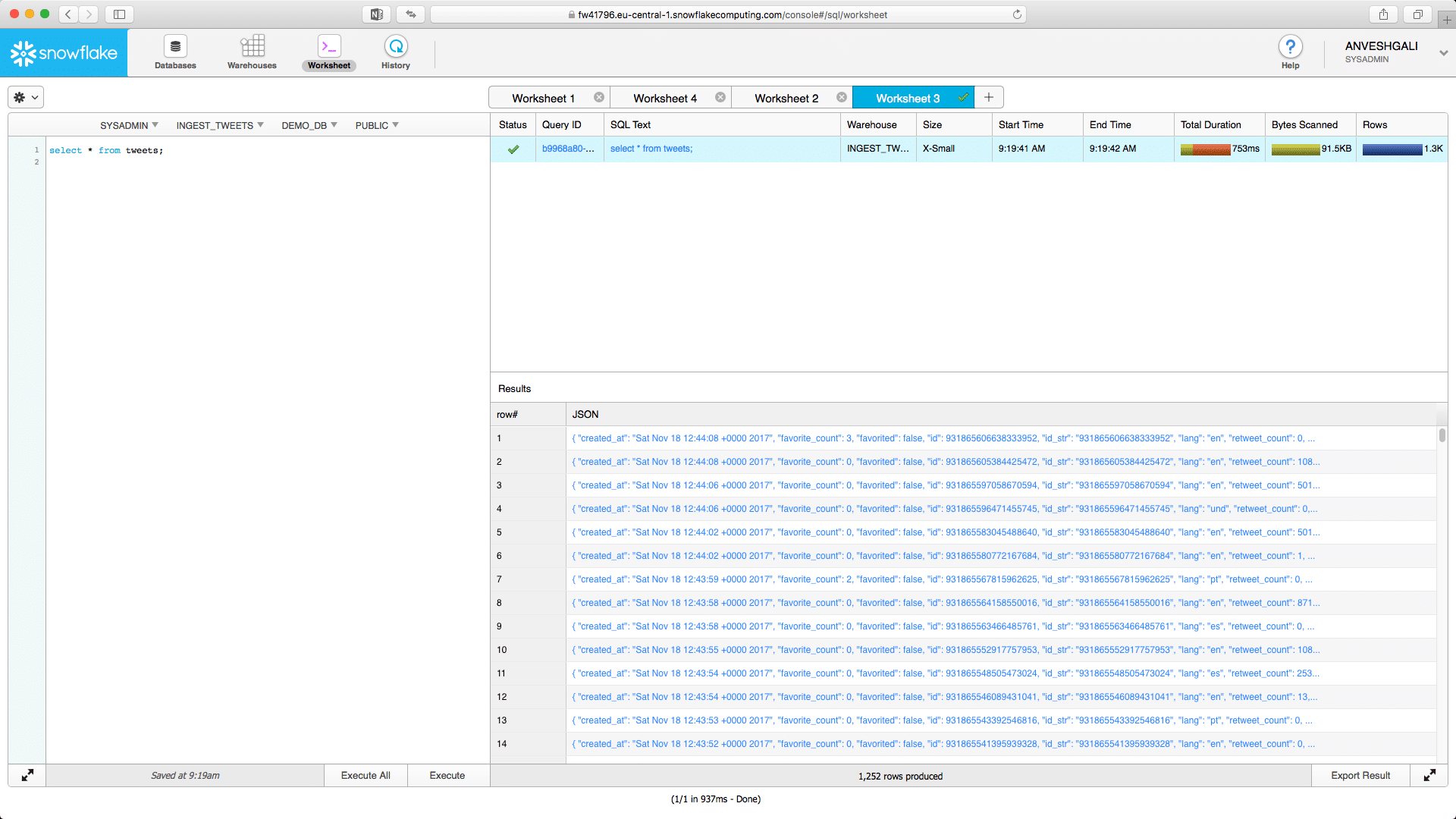

Once our data is in Snowflake, we can easily query it from the web interface after provisioning a warehouse (compute resources).

First we will run a select query to check the contents of our “Tweets” table:

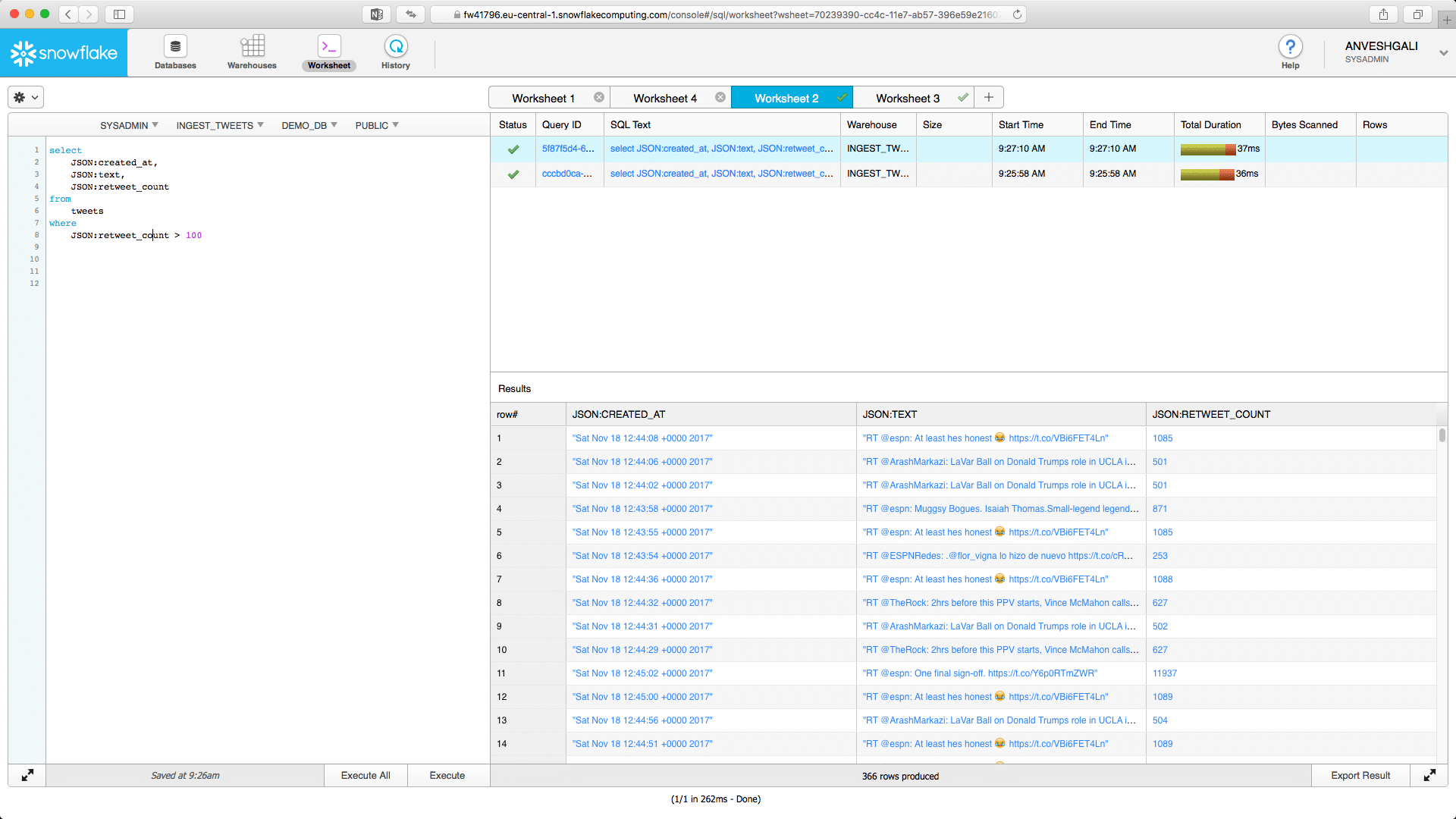

Lastly, we will run a query that extracts only specific JSON attributes of a tweet and filters the tweets based on their number of retweets.
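In Snowflake, such a query reads attributes out of the VARIANT column with the colon path notation and casts them with ::, for instance "select json:text::string, json:retweet_count::int from tweets where json:retweet_count::int > 10" (the threshold of 10 is arbitrary). The same extract-and-filter logic, sketched locally in Python over JSON strings like the ones the pipeline stores, may help make the semantics concrete; popular_tweets is a hypothetical name and this is not how Snowflake executes the query.

```python
import json
from typing import Iterable, List, Tuple


def popular_tweets(rows: Iterable[str], min_retweets: int = 10) -> List[Tuple[str, int]]:
    """Return (text, retweet_count) for tweets with more than min_retweets retweets.

    Local analogue of selecting json:text and json:retweet_count from the
    VARIANT column and filtering on the retweet count. A missing count is
    treated as 0.
    """
    result = []
    for row in rows:
        tweet = json.loads(row)
        retweets = int(tweet.get("retweet_count") or 0)
        if retweets > min_retweets:
            result.append((tweet.get("text"), retweets))
    return result
```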

In this post we have shown you how easy it is to load and query JSON in Snowflake. Also make sure to look at our other post where we load and query XML data in Snowflake with Flexter, our enterprise XML converter.


We created the content in partnership with Snowflake.