The Snowflake data platform for data engineering, data preparation, and ETL

Overview

Did you know that the Snowflake data platform is the perfect fit for ETL, data integration, and data preparation tasks?

In this blog post we will give you some hands-on advice on how you can use Snowflake as an ETL engine for data preparation.

Let’s take this common scenario as an example:

Your company has built a data lake on object storage, e.g. S3. The raw data on your data lake requires transformations. Let’s say you want to build the features for a predictive model. With Snowflake you can create an external table over your data on S3, apply the transformations for feature engineering and then unload the data back to S3 in Parquet for downstream processing by your data science platform. There couldn’t be a better fit than Snowflake for the job.

Table of Contents

Benefits of using Snowflake for data engineering

- Snowflake follows a utility billing model. You only pay for the resources you use. If your ETL job takes 2 minutes to run you only pay for those 2 minutes. If it takes 3 hours you pay for 3 hours.

- Snowflake implementation can scale your ETL workload across hundreds or even thousands of nodes. This is important because you can meet any SLA as the platform scales linearly for most transformations. Let’s assume your ETL job runs for 10 minutes on a cluster with 4 nodes (Medium sized cluster). By doubling the cluster size to 8 nodes you can cut the processing time in half. And the best thing is that you don’t pay a cent more as your job now runs in 5 minutes and you only pay for what you use. This is magic.

- Unlike other platforms, Snowflake scales instantly. You don’t have to wait for minutes before your cluster becomes available and your ETL job starts running.

- Writing code for data transformations on Snowflake is significantly faster than on other platforms. You also don’t need expensive engineers with skills in low level programming languages. Just use the power of SQL, the lingua franca of data. I have not yet come across a requirement that can not be met by writing pure SQL. I challenge you to give me an example where SQL can’t be used to implement your business logic.

- The Snowflake data platform is a fully managed service. You don’t need any resources to administer or maintain the platform.

- You can easily spin off sandboxes for data exploration, data discovery, and data science using the zero copy clone feature. This feature can copy Terabytes of data in less than a minute.

- You don’t need to license an expensive point and click ETL tool. Just run your workload directly on Snowflake.I have recently outlined the limitations of point and click tools in a presentation at the Knowledge Gap conference and also written up a blog post. At Sonra we use Apache Airflow together with Snowflake for data warehouse automation.

- Snowflake supports big data formats such as Parquet, Avro, ORC, which are commonly used in data lakes.

Snowflake for data preparation

In this scenario we have a data set on our data lake on S3. This data can be accessed by multiple consumers, e.g. Spark for data science and Snowflake for data preparation and data warehousing.

Using a Snowflake external table over the data set on S3 will allow us to query the data, run some transformations for data preparation (feature engineering) on Snowflake and then unload the data back to S3 in Parquet. From there it can be consumed by our data scientists using Spark or some other data science platform.

In summary:

- We create an external table over the data set in Snowflake. This will allow us to query the data set on S3 directly from Snowflake.

- We then apply some transformations and data preparation steps against the external table on Snowflake using SQL.

- In a last step we unload the data set back to S3 in Parquet format.

- Our data science team can use Spark to consume the Parquet data on S3.

Along the way we give you tips and tricks of working with external tables and Parquet.

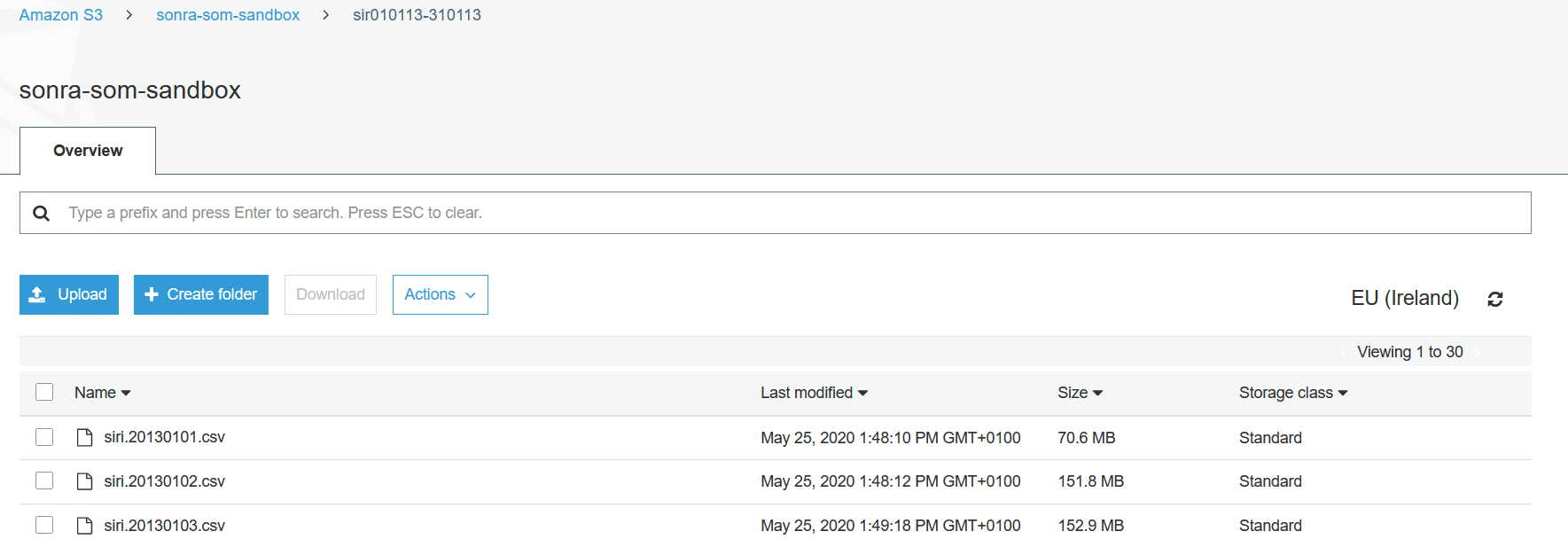

Data set on S3

The data that we are using for our purpose is GPS sample data from Dublin City Council’s Public sector information. The dataset that we have chosen is from 1st January 2013 to 31st January 2013 and can be found on this link. The dataset basically contains the travel history of the Dublin Bus services which is captured by the onboard GPS machines. This includes details like whether a bus reaches a particular stop on time, ahead of time or on a delay along with the date of journey and coordinates in latitude and longitude.

The files are in csv format, with a total of 31 files for each day of the month. Each CSV has the following columns:

- Timestamp micro since 1970 01 01 00:00:00 GMT’

- Line ID

- Direction

- Journey Pattern ID

- Time Frame (The start date of the production time table – in Dublin the production time table starts at 6am and ends at 3am)’

- Vehicle Journey ID (A given run on the journey pattern)’

- Operator (Bus operator, not the driver)’

- Congestion [0=no,1=yes]’

- Lon WGS84′

- Lat WGS84′

- Delay (seconds, negative if bus is ahead of schedule)’

- Block ID (a section ID of the journey pattern)’

- Vehicle ID’

- Stop ID’

- At Stop [0=no,1=yes]

We have imported all the files to an S3 bucket in AWS. We will be fetching the same from S3 to an external table on Snowflake for our further use. Below we can see how the data looks in S3:

External tables on Snowflake

External Table

Unlike a typical table in SQL where the data is stored physically in the database, external tables are used to store the data in a stage. The external table feature is available in Snowflake as well. It stores the file-level metadata about the data files, which in turn allows the user to query data stored in these files as if we had an actual table with the data in it.

A few key advantages of using external tables:

- Decentralised and distributed loading of scalable data warehouse. The user can load the external data wherever they want and then simply copy the table or partition to the location where the DBMS expects it. This also makes sure that the new table is able to sync with the expected schema.

- External tables facilitate the same table to be used from multiple databases, depending on which database the query is originating from. Hence the table can be joined with other local tables in such databases. For example, if we have an external file of ORC, CSV etc. we can upload the same on Hive DBMS, SQL Server, Snowflake, ORACLE or any such DBMS. Now each/any of these databases can merge their local tables with the shared external tables.

- It is extremely useful if the situation requires read-only analytical use of the data stored in a particular location. Instead of having to load the entire data into an actual table occupying space in the database, the external stage lets the user perform transformations, calculations and other analysis as per requirement.

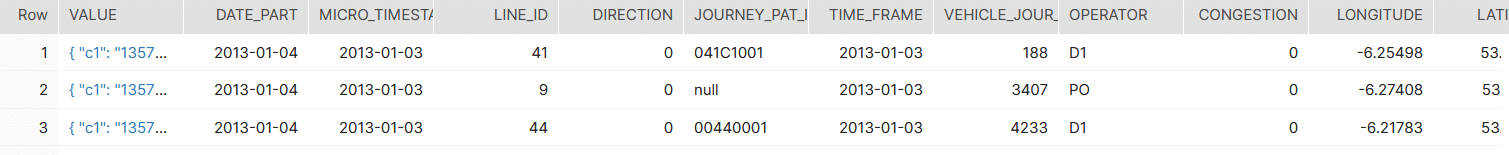

An external table includes the following columns:

- VALUE: It is a VARIANT type column that represents a single row in the external file.

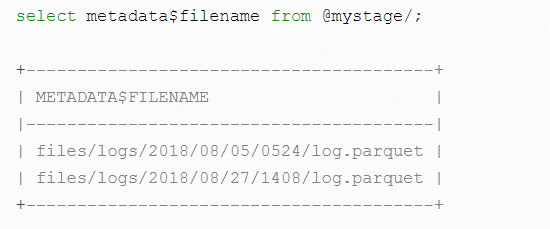

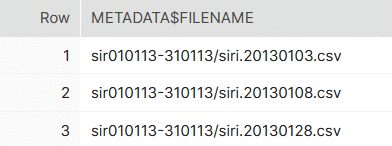

- METADATA$FILENAME: A pseudocolumn that identifies the name of each staged data file included in the external table, including its path in the stage. A sample pseudocolumn would look like this:

The above sample example (source) gives us an idea of how we can use the file path as the source of our partitioning information as the folders are structured by date.

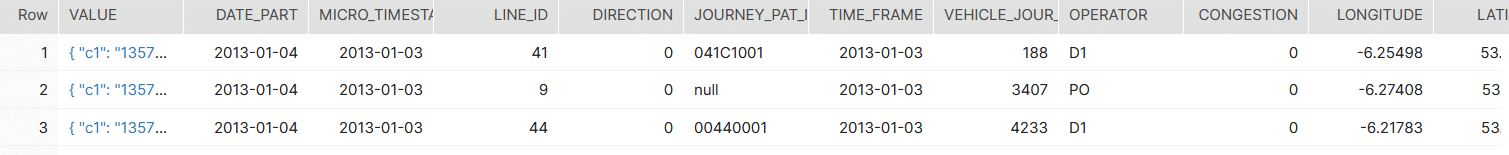

- Virtual Columns: If the user is familiar with the schema of the source data file, then you can create additional virtual columns as expressions using the VALUE column and/or the METADATA$FILENAME pseudocolumn. For example, in our case we have 15 columns in the CSV. Hence the same columns can be repeated in the external table as well and they would be the virtual columns.

In the above example we can see the VALUE column and the other virtual columns as well.

Partitioning External Tables in Snowflake

Users should also remember that Snowflake by default provides the feature of micro partitioning and data clustering for stored data. One of the main features of an external table is the manual partitioning access and it is highly recommended to do so as well. Let us take a look at some of the key benefits from partitioning of external tables:

- Table partitioning helps first and foremost to break down huge quantities of data into smaller chunks. Separation into smaller parts improves management and maintenance abilities in almost every DBMS architecture.

- Query performance is largely improved. Instead of querying the entire data set for the results, queries can be modified to search only a certain partition which increases efficiency. This process is called partition pruning.

This process requires that the source data for the table is organised by a logical path, e.g. date, time, country or a similar attribute. The external table can be divided into multiple partitions based on such information.

Partition columns must evaluate as expressions which parse the path and /or the filename information stored in the METADATA$FILENAME pseudocolumn. Based on the information available, Snowflake datalake architecture allows an external table to have multiple partition columns if required giving the user a multi-dimensional structure to the data.

Let us look at the steps to create our own stage table for the data that we are using:

- Create an S3 Stage : Snowflake allows us to create a named external stage which captures all the information required for the staging files, including:

- The S3 bucket where the files are staged.

- The named storage integration object or S3 credentials for the bucket (if it is protected).

- An encryption key (if the files in the bucket have been encrypted).

Query to create the external stage:

|

1 2 3 |

CREATE OR replace stage mystage url='s3://sonra-som-sandbox/sir010113-310113/' credentials=(aws_key_id='*****' aws_secret_key='*****') encryption=(master_key = '*********') file_format = my_csv_format; |

The encryption details and file_format property are optional requirements for the query.The credentials are absolutely essential as without them AWS doesn’t let the user access to the content of the bucket.

2. Query the METADATA$FILENAME: The psecodulumn will contain the information based on which we can partition our external column.

Query:

|

1 |

select metadata $ filename from @mystage/ group by 1; |

Output:

As we can see, the pseudocolumn gives us the path and the filename using either of which we can partition our external table. For our purpose we will be using the csv file names as they are already named with date.

3. Create the external Table: We are going to use the pseudocolumn to partition our external table and the virtual columns for all the 15 columns of the csv files. Let’s look at the query:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

create external table dublin_bus_gps(date_part date as to_date(substr(metadata $ filename, 23, 8), 'YYYYMMDD'), micro_timestamp date as (value:c1::date) , line_id number as (value:c2::number) , direction number as (value:c3::number) , journey_pat_id varchar as (value:c4::varchar) , time_frame date as (value:c5::date) , vehicle_jour_id number as (value:c6::number) , operator varchar as (value:c7::varchar) , congestion number as (value:c8::number) , longitude number(8, 5) as (value:c9::number(8, 5)) , latitude number(8, 5) as (value:c10::number(8, 5)) , delay number as (value:c11::number) , block_id number as (value:c12::number) , vehicle_id number as (value:c13::number) , stop_id varchar as (value:c14::varchar) , at_stop number as (value:c15::number))partition by (date_part) with location = @mystage/ file_format = (type = csv); |

In the above query the date_part casts the YYYYDDMM in the METADATA$FILENAME pseudocolumn as a date using the TO_DATE function. We have also specified the format of the files as csv. Once we execute the statement, the external table will be created and we can check the output with the following SQL:

Query:

|

1 |

select * from dublin_bus_gps limit 1000; |

Output:

Data preparation with SQL

With an external table we can perform all kinds of read only operations on the data. Hence, it is very useful to gain some information about the data. We can also create some transformations on the data. Let us look at a few examples of the same here:

Example 1: The first one is the use of a Lateral join in Snowflake. We will join an existing table in the Snowflake DB with the external table to check what employee details match with the line ID from the external table. The LATERAL keyword here allows the sub select access to the columns of the FROM clause that appear before it in the FROM list.

Query:

|

1 2 3 4 5 6 7 8 |

SELECT * FROM dublin_bus_gps AS d, lateral ( select * from employee AS e WHERE e.employeeid = d.line_id) AS iv2 ORDER BY employeeid; |

Example 2: Let’s look at a window function – Dense_Rank in Snowflake. We would like to look at how we can partition/group the data by line ID and inside the partitions we would want to know which stops have the highest delay in bus timings. Let’s take a look at the query for say 5000 records from the data set:

|

1 2 3 4 5 6 7 |

SELECT micro_timestamp, line_id, stop_id, operator, delay, Dense_rank() OVER(partition BY line_id ORDER BY delay DESC) AS highest_delay_rank FROM dublin_bus_gps limit 5000; |

Example 3: For this example let’s look at another window function from Snowflake – AVG. Now for lets say 10000 rows of data we want to see in a decreasing order of daily time frame the average amount of delay on the bus stops which fall on a particular journey ID.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

SELECT time_frame, vehicle_jour_id, stop_id, delay, avg_delay FROM ( SELECT time_frame, vehicle_jour_id, stop_id, delay, (Avg(delay) OVER (partition BY stop_id ORDER BY time_frame DESC)) AS avg_delay FROM dublin_bus_gps WHERE journey_pat_id LIKE '%01500001%' limit 10000 ) AS delay_data; |

Example 4: If we want to create a table in the Snowflake Database using the external table as a source then all we need to do is write a simple CTAS statement.

|

1 2 3 |

CREATE TABLE parquet_file_table AS (SELECT * FROM dublin_bus_gps); |

Unload data to Parquet

In this section we will take a look at the steps to unload the data to Parquet on S3. We will be aiming at this in two ways. Snowflake offers some excellent guidelines on loading and unloading Parquet files and our steps will be based on them. Snowflake also offers the user the feature to define their own custom file size when using the following processes based on COPY_INTO. By default the size is set at 15 MB upto a maximum of 5 GB in Amazon S3 or Google Cloud Storage stage for this command that we will use. The command has a keyword “MAX_FILE_SIZE” which can be used to set the same as required.

Process 1:

- We take all the data in the external table and transfer it into a temporary table.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

CREATE OR replace temporary TABLE parquet_file_table AS ( SELECT micro_timestamp, line_id, direction, journey_pat_id, time_frame, vehicle_jour_id, operator, congestion, longitude, latitude, delay, block_id, vehicle_id, stop_id, at_stop number FROM dublin_bus_gps); |

- Now we just use this temporary table to transfer all the data into S3 with a COPY INTO command.

|

1 2 3 |

COPY INTO 's3://sonra-som-sandbox/parquet-files/' FROM (SELECT * FROM parquet_file_table ) file_format = (TYPE = parquet) header = TRUE credentials = (aws_key_id = '******' aws_secret_key = '******' ); |

Process 2: We directly use the data from the external table and transfer it to the S3 bucket:

|

1 2 3 4 5 |

COPY INTO 's3://sonra-som-sandbox/parquet-files/' FROM (SELECT micro_timestamp, line_id, direction, journey_pat_id, time_frame, vehicle_jour_id, operator, congestion, longitude, latitude, DELAY, block_id, vehicle_id, stop_id, at_stop NUMBER FROM dublin_bus_gps) file_format = (TYPE = parquet) header = TRUE credentials = (aws_key_id = '*****' aws_secret_key = '*******' ); |

Instead of providing the S3 bucket URL a separate stage can be created for the same and can be used in the query as well. So we can rewrite our first query as:

|

1 2 3 |

COPY INTO @mystage/ FROM (SELECT * FROM parquet_file_table ) file_format = (TYPE = parquet) header = TRUE credentials = (aws_key_id = '******' aws_secret_key = '******' ); |

Conclusion

We all know that Snowflake is a formidable platform when it comes to data warehousing.

In this article we have also shown what a great choice it is for data engineering tasks such as data preparation, feature engineering and ETL.

You can use Snowflake external tables to query your data on your data lake on object storage on AWS, Azure, or GCP for data exploration and data discovery purposes.

You can use the Snowflake engine to run transformations of any complexity including feature engineering and statistical analysis. Snowflake has excellent support for advanced SQL features, sampling, and statistical functions.

Once we have transformed the data we can unload it to object storage to Parquet for downstream processing and querying.

I hope you enjoyed this blog post. Please contact us if you want us to blog about a particular Snowflake topic. Until next time. The Sonra Snowflake team.

We created the content in partnership with Snowflake.

Enjoyed this post? Have a look at the other posts on our blog.

Contact us for Snowflake professional services.