Using subqueries in Oracle Data Integrator (ODI) interfaces for complex data integration requirements

Get our e-books Discover the Oracle Data Integrator 11g Repository Data Model and Oracle Data Integrator Snippets and Recipes

Oracle Data Integrator does not have any built in functionality for subqueries in interfaces. This is one of the reasons why you will find people claiming that ODI has shortcomings when dealing with complex transformations.

FlowForward.

All Things Data Engineering

Straight to Your Inbox!

Shortcomings of existing workarounds

In the ODI community various workarounds have been suggested to address this. The following discussion thread on OTN summarises these efforts.

1) Use of WHERE EXISTS in the predicate

2) Use of a View that contains the complex subquery transformation logic as the source datastore.

3) Use of a series of temporary (yellow) interfaces.

While all of these are valid workarounds each of the above approaches has drawbacks of its own.

The use of WHERE EXISTS only addresses a small subset of requirements. Using views has one big disadvantage. You will lose data lineage. By using views you will not be able to trace mappings from source to target and as a result lose one of the advantages that an ETL tool offers over scripting. In theory performance should not suffer as Oracle uses predicate pushing and view merging. Tom Kyte has written an excellent article about this for Oracle Magazine a while back if you require more information (look for the section on Views and Merging/Pushing at the bottom of the article). The third option is to use a series of temporary interfaces. However, this has the disadvantage of physically setting down and materialising the resultset for each subquery, rather than processing everything in memory. Depending on the circumstances, you may experience degradation in performance.

Proposed workaround

I propose another workaround here that addresses all of the above shortcomings. We will use a combination of a temp (yellow) interface and a custom knowledge module to address the issues. Each subquery in our complex transformation requirement will be assigned to a temporary interface. I have written a custom integration knowledge module that will store the SQL of the subquery for each temporary interface in a table. We then stick all of our temp interfaces with the subqueries into a package and then let the knowledge module combine the individual subqueries into one complex query before loading the data into our target table. Take the following as an example. We have a requirement to rank the Top 10 purchasing customers and insert them into a target table. In SQL this can be done by using the rank() analytic function in a subquery and then filtering on this subquery. In our solution this will translate into two interfaces. One temp interface for the subquery with the analytic function. Our custom KM will store the SQL for this subquery in a table. We will then need a second (non-temp) interface that filters on the top 10 purchasing customers, combines the two queries, executes the resulting complex query, and inserts the resultset into the target table.

This is is just a prototype at the moment to demonstrate that it is possible to execute complex transformations in ODI. At the moment the solution is specific to Oracle. I intend to rewrite parts of the knowledge module using Jython arrays to store the subqueries over the next couple of weeks to make the solution technology agnostic.

The subquery knowledge module

Before I give a working example I want to give a brief overview on the custom knowledge module that is our work horse.

Step 1. Create table that stores the SQL for the subqueries.

Nothing spectacular here. We create a table that will hold the SQL for our subqueries. The data type for the sql query column is a CLOB (this will become relevant in a later step). We also have a session number column to store the ODI session number for each subquery. This will allow us to concurrently use the knowledge module.

|

1 2 3 4 5 6 7 |

CREATE TABLE SQL_QUERY ( QUERY CLOB, IDENTIFIER VARCHAR2(30 BYTE), SESS_NO NUMBER, CREATE_DATE TIMESTAMP(6) ) |

Step 2: Store SQL pieces for subquery in Jython variables

In step two we store the SQL pieces for the subquery in Jython variables (note Jython as the technology). We retrieve the individual SQL components via the ODI substitution API.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

v_collist = """<%=snpRef.getColList("", "t[EXPRESSION]", ",n", "", "")%>""" v_query = """select <%=snpRef.getPop("DISTINCT_ROWS")%> <%=snpRef.getColList("", "t[EXPRESSION] AS [CX_COL_NAME]", ",n", "", "")%> from <%=odiRef.getSrcTablesList("", "([TABLE_NAME])", ", ", "")%> where (1=1) <%=snpRef.getJoin()%> <%=snpRef.getFilter()%> <%=snpRef.getJrnFilter() %>%s""" if v_collist.find('OVER') == -1: v_group_by = """<%=snpRef.getGrpBy()%><%=snpRef.getHaving()%>""" else: v_group_by ="" v_query = v_query % v_group_by |

Make sure that you use the tab key for indentation. This seems to be a peculiarity of Jython.

Note also that I have built in a simple check for the presence of analytic functions into the code. If we find an analytic function in the source to target column mappings we do not generate the GROUP BY SQL piece as otherwise an error will be thrown. This simple check is missing from all of the out of the box knowledge modules. As a result analytic functions such as SUM() OVER, MAX() OVER etc. can not be used in interfaces. I consider this a bug in the getGrpBy() API substitution method in ODI.

There is a clever workaround for the above described in Metalink note 807527.1

Step 3: Debug subquery

In this step we build a debugging mechanism into the knowledge module. We use the Jython raise function to throw an exception and print out the value of the v_query variable to the Operator. This will allow us to quickly review the value of the generated SQL in the Operator module and if necessary allow us to debug it.

Note that you have to select the Ignore Errors checkbox. Otherwise execution of the interface will terminate at this step (after all we are forcefully raising an error).

Step 4: Store SQL for subquery in table

In this step we will use the Jython technology to store the SQL for the subquery together with the ODI session number in a table. We use a Jython stored procedure call to accomplish this.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

import java.sql as sql import java.lang as lang import java.sql.Types as types myCon = snpRef.getJDBCConnection("SRC") try: inVar1 = v_query inVar2 = '<%=odiRef.getTargetTable("TABLE_NAME")%>' inVar3 = <%=odiRef.getSession("SESS_NO")%> myStmt = myCon.prepareCall('begin LOAD_SQL_QUERY (?,?,?); end;'); ## Bind parameters (IN parameters) myStmt.setString(1, inVar1); myStmt.setString(2, inVar2); myStmt.setInt(3, inVar3); ## Execute the callable statement myStmt.execute(); finally: inVar1 = 'myVar1'; |

Note that you have to use indentation using your tab key exactly as in the screenshot as otherwise an error will be thrown. Have a look at 424207.1 for more information on calling stored procedures from Jython.

Code for the stored procedure load_sql_query is as follows:

|

1 2 3 4 5 6 7 |

CREATE OR REPLACE PROCEDURE load_sql_query (p_query_value IN CLOB,p_identifier IN VARCHAR2,p_sess_no IN NUMBER) IS BEGIN INSERT INTO sql_query VALUES (REPLACE(p_query_value,CHR(10)||CHR(10),''),p_identifier,p_sess_no,SYSTIMESTAMP); COMMIT; END; / |

Step 5: Create complex query from individual subqueries

We only execute this step once we have run all of our subqueries. This step takes all of the subqueries and creates our final complex query. Condition for executing this step is that we are dealing with a non-temporary table that is a normal (non-temp) interface.

Command on Source

|

1 |

SELECT NVL('<%=odiRef.getTargetTable("TABLE_TYPE")%>','Yellow') AS table_type FROM dual |

The getTargetTable method returns ‘T’ for a non temp target table and NULL for a temp target table.

Command on Target

|

1 2 3 4 5 6 7 8 |

BEGIN -- INSERT INTO target_table VALUES ('#table_type', <%=odiRef.getSession("SESS_NO")%>); --COMMIT; -- Only execute if target table is not of type temporary IF '#table_type' = 'T' THEN sql_substitute (<%=odiRef.getSession("SESS_NO")%>); END IF; END; |

We only execute this step if the variable table_type is of type non-temp, that is equals T.

If we are dealing with a non-temp table and this is our final step in our complex transformation we generate the final complex query from the underlying subqueries by executing a stored procedure.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

CREATE OR REPLACE PROCEDURE sql_substitute (p_sess_no IN NUMBER) -- exec target_sql; IS final_raw_query CLOB; CURSOR c_iterator_outer IS SELECT query, identifier, create_date FROM sql_query WHERE sess_no = p_sess_no ORDER BY create_date DESC; CURSOR c_iterator_inner IS SELECT query, identifier, create_date FROM sql_query WHERE sess_no = p_sess_no ORDER BY create_date; BEGIN SELECT query INTO final_raw_query from sql_query where create_date = ( select MAX(create_date) FROM sql_query); FOR r_iterator_outer IN c_iterator_outer LOOP dbms_output.put_line ('outer query:' || r_iterator_outer.query || ' identifier:' || r_iterator_outer.identifier); FOR r_iterator_inner IN c_iterator_inner LOOP final_raw_query := REPLACE(r_iterator_inner.query,'('||r_iterator_outer.identifier||')','('||r_iterator_outer.query||')' || ' ' || r_iterator_outer.identifier); UPDATE sql_query SET query = final_raw_query WHERE create_date = r_iterator_inner.create_date; dbms_output.put_line ('inner query' || final_raw_query); COMMIT; END LOOP; END LOOP; END; / |

There may be a more elegant way of doing this but this seems to work for the moment. Originally I had thought of using the model clause for this but then noticed that this does not support CLOBs.

Step 6: Execute complex query and insert transformed data into target table

In this step we grab the transformed and substituted complex query from our table and execute it.

Command on Source

|

1 2 3 4 5 6 7 8 9 |

SELECT NVL('<%=odiRef.getTargetTable("TABLE_TYPE")%>','Yellow') AS table_type, -- Metalink 423909.1 Edit odiparams or use jdbc driver 5 or 6 query AS query FROM sql_query WHERE sess_no = <%=odiRef.getSession("SESS_NO")%> AND create_date = ( SELECT MAX(create_date) FROM sql_query WHERE sess_no = <%=odiRef.getSession("SESS_NO")%>) |

In the Command on Source we retrieve the complex SQL query from our database table.

As we are dealing with a CLOB here we first need to make some changes to the odiparams.bat file. Alternatively you can use version 5 or 6 of the JDBC driver (Metalink 423909.1). I have outlined in a previous post how to achieve this.

If we don’t want to install a new JDBC driver we need to add the following line to the odiparams.bat

set ODI_ADDITIONAL_JAVA_OPTIONS= “-Doracledatabasemetadata.get_lob_precision=false”

You will need to restart the Designer module for this to take effect.

Command on Target

|

1 2 3 4 5 6 7 8 9 |

BEGIN IF '#table_type' = 'T' THEN INSERT INTO <%=odiRef.getTable("L","TARG_NAME","A")%> (<%=odiRef.getColList("", "[COL_NAME]", ",nt", "", "((INS and !TRG) and REW)")%> <%=odiRef.getColList(",", "[COL_NAME]", ",nt", "", "((INS and TRG) and REW)")%> ) #query; COMMIT; END IF; END; |

Note that we need to select the Ignore Errors checkbox. Otherwise the KM will fail at this step for the subqueries stage. A better approach for this may be to use Jython and pass the #query variable into a stored procedure that executes it as dynamic SQL.

Currently I use an INSERT statement for loading the target table. In a future version of this KM I also intend to add a MERGE statement as an option.

Step 7: Write complex query to Operator module

In a final step we write the complex query to the Operator. We can then easily debug any issues with it.

Command on Source

|

1 2 3 4 5 6 7 8 9 |

SELECT NVL('<%=odiRef.getTargetTable("TABLE_TYPE")%>','Yellow') AS table_type, -- Metalink 423909. Edit odiparams or use jdbc driver 5 or 6 TO_CHAR(query) AS query FROM sql_query WHERE sess_no = <%=odiRef.getSession("SESS_NO")%> AND create_date = ( SELECT MAX(create_date) FROM sql_query WHERE sess_no = <%=odiRef.getSession("SESS_NO")%>) |

Command on Target

|

1 |

raise("""INSERT INTO <%=odiRef.getTable("L","TARG_NAME","A")%>(<%=odiRef.getColList("", "[COL_NAME]", ",nt", "", "((INS and !TRG) and REW)")%> <%=odiRef.getColList(",", "[COL_NAME]", ",nt", "", "((INS and TRG) and REW)")%> ) """ + """#query""") |

The subquery knowledge module in action – Two examples

Let’s now have a look at our new Knowledge Module in action. For this purpose we will use tables from the SH sample schema.

Example 1: Subquery with analytic function

In this example we want to retrieve the date on which the amount sold for a customer was the greatest. One way of achieving this is to use the ROW_NUMBER() analytic function in a subquery to rank the amount sold and then filter the Top 1 record from this subquery. So the query would look similar to the following

|

1 2 3 4 5 6 7 8 9 |

SELECT CUST_ID AS CUST_ID, TIME_ID AS TIME_ID, AMOUNT_SOLD AS AMOUNT_SOLD, row_number() OVER (PARTITION BY CUST_ID ORDER BY AMOUNT_SOLD DESC, TIME_ID DESC) AS RN FROM sales ) SQ1 WHERE SQ1.rn = 1 |

We will now generate this query with our new knowledge module.

First of all we will generate a temporary interface for the subquery with the analytic function in it.

Select the Staging Area Different From Target checkbox and from the dropdown select the schema you want to execute this in.

In the Target Datastore click on Untitled. Type SQ1 as the Name for the target datastore and select the Data schema radiobutton.

Drag the sales table from the SH model to the Sources area (you first need to reverse engineer this from the SH schema).

Drag and drop the columns CUST_ID, TIME_ID and AMOUNT_SOLD from the source datastore to the target datastore.

Right click inside the Target Datastore area and select Add a column

Name the new column rn and type in the value for the analytic function:

row_number() OVER (PARTITION BY SALES.CUST_ID ORDER BY SALES.AMOUNT_SOLD DESC, SALES.TIME_ID DESC)

Go to the Flow tab and select the custom subquery knowledge module.

That’s it, we have created the yellow interface for our subquery.![]()

In a next step we now need to create the interface that takes the sales_amount yellow interface as its source and loads the target table.

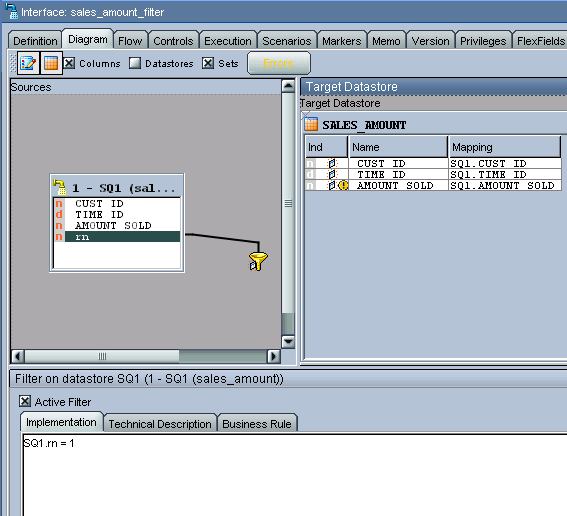

Create an interface, name it sales_amount_filter, drag and drop the sales_amount interface to the Sources area, and create a filter as per screenshot below.

We need to create a table for our target datastore. Use the script below to create this table, reverse engineer it and drag and drop it to the target datastore area.

|

1 2 3 4 5 6 |

CREATE TABLE SALES_AMOUNT ( CUST_ID NUMBER, TIME_ID DATE, AMOUNT_SOLD NUMBER ); |

Next move on to the Flow tab and select our custom subquery knowledge module.

We now need to bring the two interfaces together in a package and then we are ready to load our target.

Execute the package and then switch to the Operator module. You should get something similar to the screenshot below.

Let’s have a look at the execution of the individual steps. We’re particularly interested in the subqueries and the final complex query. So let’s have a look at the debug steps where these will show up.

Below we see the query for the temp interface that contains the analytic function.

Next we’ll have a look at the query that is generated for the second (non-temp) interface.

As you can see from the figure above, the query selects from the SQ1 target table of the temporary interface.

We substitute SQ1 in step 12 of the package with the SQL query of the analytic function and then use this complex query to insert into the target table.

Example 2: Joining two subqueries

In the next example we take this a step further and join another subquery to the package from example 1.

We first create another subquery using a temp interface. As you can see from the figure below we aggregate the amount_sold by cust_id.

We then take this temp interface and the temp interface from example 1 and join these together on the cust_id in yet another interface.

The script for the target table sum_sales_amount is as follows

|

1 2 3 4 5 6 7 |

CREATE TABLE SUM_SALES_AMOUNT ( CUST_ID NUMBER, TIME_ID DATE, AMOUNT_SOLD NUMBER, SUM_AMOUNT_SOLD NUMBER ) |

Next we stick the three interfaces into a package.

… and execute it.

From the Operator module we can see the generated SQL.

Conclusion

In this article I have shown you how you can execute complex queries and subqueries in ODI without loss of data lineage and without suffering performance degradation. So far I have not used the knowledge module in a production environment. So I am sure there is still room for improvement. However, from now on I will use and further develop this approach to meet complex transformation requirements in ODI.

Any input highly welcome. Also let me know of bugs that you come across or if you find that any of the above is unclear.

In order to master scripting in ODI I recommend the following books.

Java BeanShell

Scripting in Java: Languages, Frameworks, and Patterns

Jython

The Definitive Guide to Jython: Python for the Java Platform.

Jython Essentials (O’Reilly Scripting)