Build a Predictive Model on Snowflake in 1 day with Xpanse AI

Intro

“Building a Churn Model is at least 3 months of work”

You are the Data Analytics Manager and you have successfully implemented your Snowflake data warehouse. Great news! You deserve a pat on the back 🙂

Thanks to Business Intelligence and Data Warehousing you can easily identify problems in your business. For instance, your shiny new dashboards show that a significant number of customers are churning and switch over to the competition. While it’s critical to know those numbers – BI can’t tell you why you have a problem or how to fix it.

This is where we need to go beyond traditional performance analytics and look at techniques from Statistics, Data Mining, and Data Science. In this particular case you want to identify those customers that are likely to churn in the near future so you could direct a retention campaign at them.

But how do you build a Churn Prediction Model? Doesn’t this take a very long time?

We need to:

Table of Contents

- find the relevant data

- prepare the data for for Machine Learning

- train the Machine Learning models

- evaluate them

- implement the model.

- organise the ETL so the model can score the customers.

This sounds like a daunting task and the last time you’ve watched a project like that – it took 3 months and 2 Data Scientists to deliver a Churn Model.

In this blog post we show you how you can automate the whole process with the Xpanse AI platform and deliver a Churn Model in 1 day.

Even better, we will deploy our Machine Learning model directly in the Snowflake database.

Yes, that’s right. To score your customers for their churn risk, your data does not have to leave the Snowflake database. You can score records directly in Snowflake and take advantage of its linear scalability to meet any SLAs.

9:00am – Eyeballing the Data.

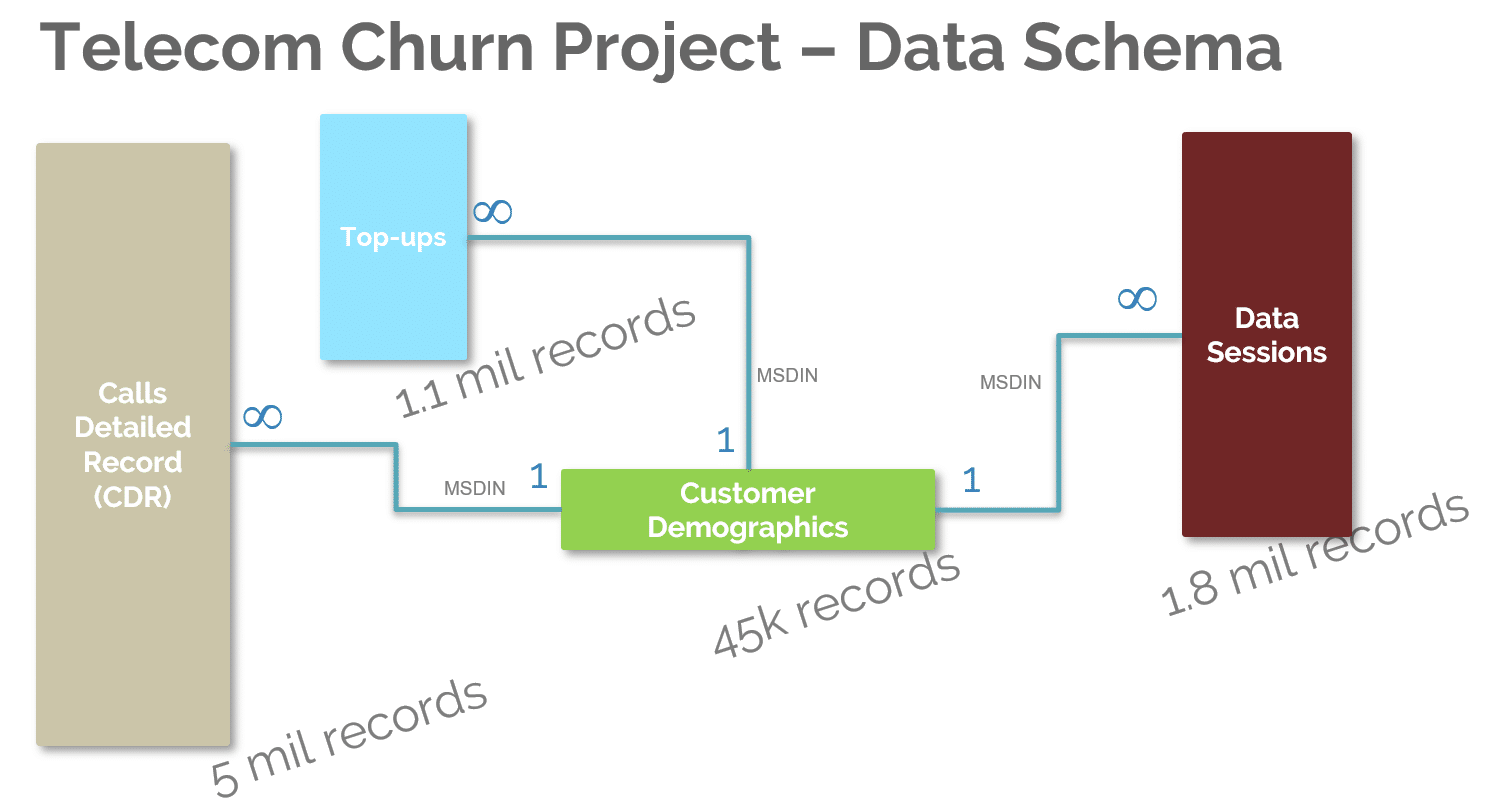

Our client is a EU based telecom with the Data Warehouse built in Snowflake. For this project we chose several main tables:

- Call Detailed Record (CDRs) contains detailed call-by-call record of the phone usage. CDRs are usually the largest tables in the telecoms.

- Data Sessions – a per-session record of uploads and downloads made by Subscribers.

- Top-Ups – a history of topping up the phones.

- Customer Demographics – a more static set of key descriptors for the Subscribers.

Creating good Target tables might be a challenging task – depending on the business problem and the available data. This time we were quite lucky with a very clear record of when customers switched their phone numbers from one operator to another.

The Problem

“Machine Learning digests only flat Modelling Dataset – not the data as it’s stored in the database”

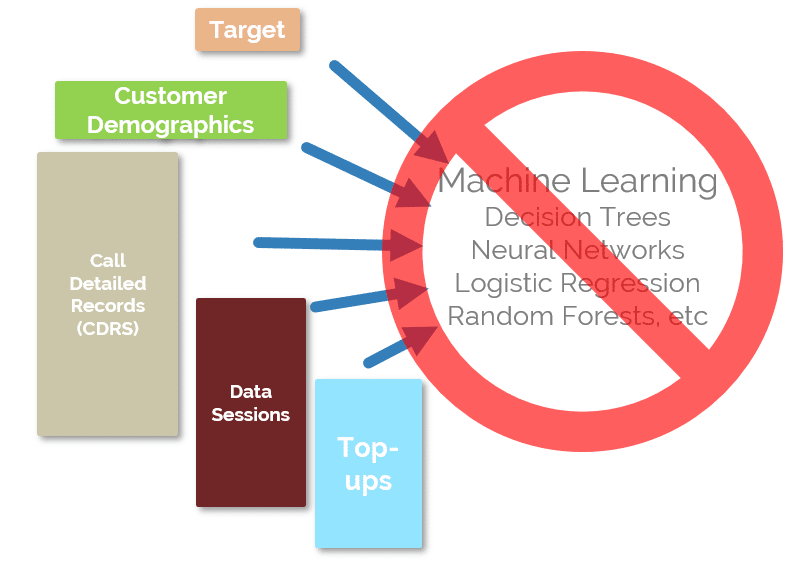

After eyeballing the data, it became clear that it cannot be immediately used to build a Churn Model. Bummer.

You see – at the core of Predictive Analytics sit Machine Learning algorithms – such as Decision Trees, Logistic Regression or Random Forests.

Unfortunately, they accept the input data in a very specific format and they will not accept the raw non-aggregated information extracted from the database.

What Machine Learning needs – is a flat, very wide table containing many aggregates called Input Columns or Features.

Such a table needs to be built for every project by designing hundreds or even thousands of SQL queries.

Someone has to do it and this someone is typically a Data Science team, which we currently don’t have.

Fortunately I have a rough idea what needs to be done – so I can attempt to do it myself.

10:00am – Manual Data Prep Attempt

“The feature space is infinite”

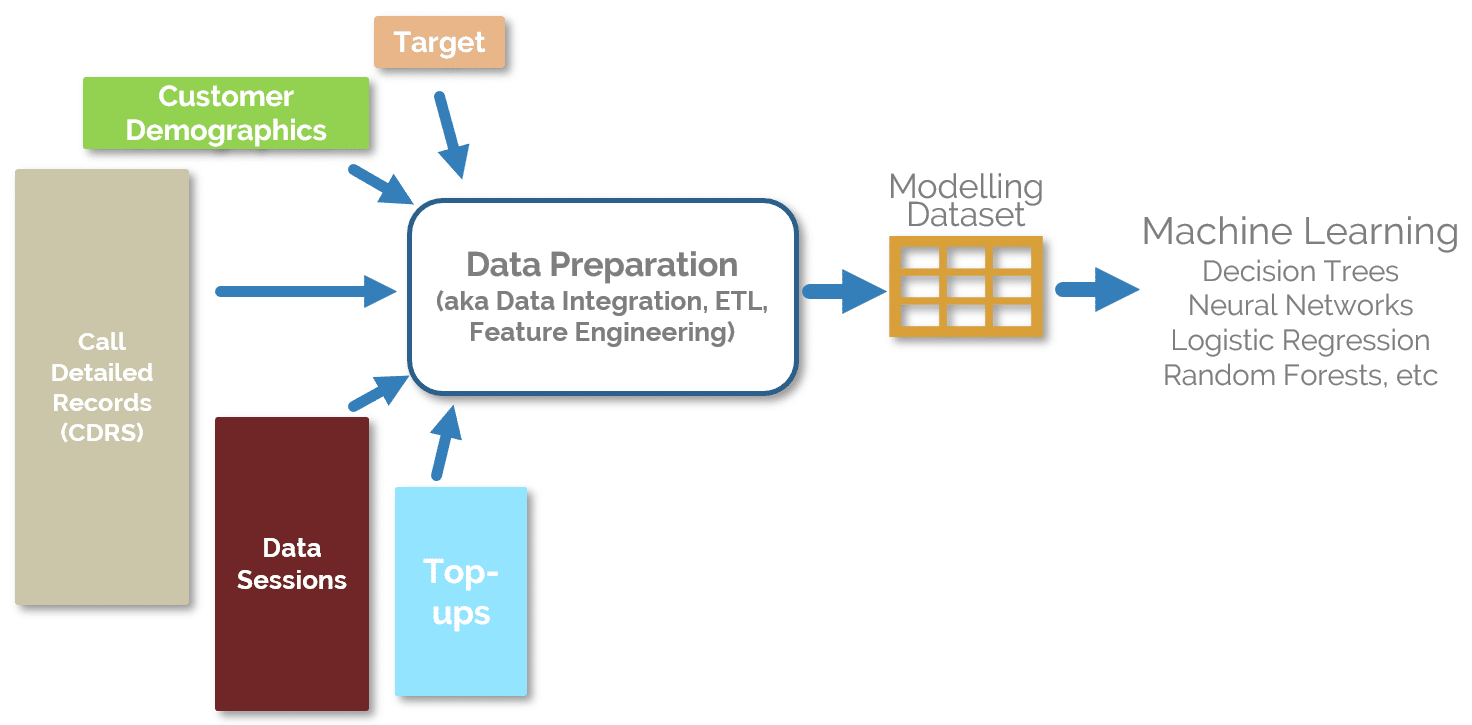

As you probably have heard many times – 70% to 80% of the Predictive Modelling project time is spent on Data Preparation.

This is exactly the process of transforming data from the “database” structure to the “Machine Learning” friendly format.

It takes a lot of digging around the data trying to understand the meaning of the columns and their content to come up with the design for Modelling Dataset.

As an example – this is how a Data Scientist would approach it when delivering the project in the traditional way:

“Looking at the table Call Detailed Records it would be good to create an aggregate (aka an Input Column or a Feature) which would describe the Number of calls made by each Subscriber in 30 days prior to Churn.”

A sketch of the SQL to create a Feature like that could look as follows:

|

1 2 3 4 5 6 |

SELECT msdin, COUNT(msdin) FROM cdrs RIGHT JOIN target_table ON cdrs.msdin = target_table.msdin AND cdrs.call_dt < target_table.timestamp AND cdrs.call_dt > (target_table.timestamp – 30) GROUP BY msdin |

This is merely a starting point.

Why only “in 30 days prior”? Why not 1 day? Or 365 days?

How about calculating only the number of outgoing calls?

What about an average duration of the call?

There is virtually an infinite number of aggregates we can derive just from any given source table. The more Features we build – the higher the chances for improving the Model’s accuracy. Also – the more time we spend on this – the higher the cost of the Project and the more outdated Model built as a result.

We have an ambitious plan to deliver the Churn Model to our Client by the end of today. No time for manual Data Preparation.

We will try a new tool on the market – Xpanse AI, which promises to do all that work automatically. We will see.

11am – Exporting the Data and Uploading to Xpanse AI

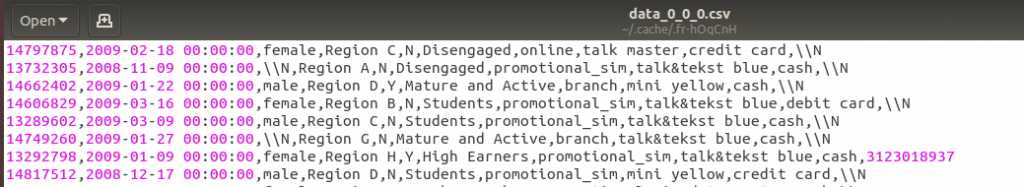

Xpanse AI ingests the data in the CSV format, so as the first step we need to export tables from our database.

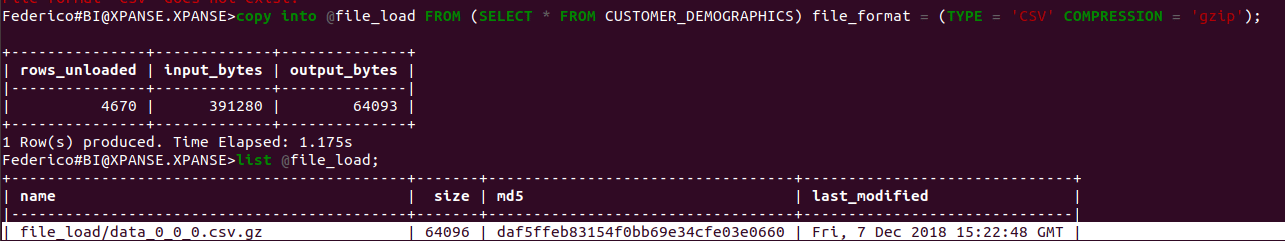

Step 1: Export the Data to CSV files

To start the unloading process from our SF database, we will copy the required data from the database tables into CSV files.

To do that, run the following:

|

1 |

COPY INTO @<SF_stage> FROM (SELECT * FROM <table_name>) file_format = (TYPE = 'CSV' COMPRESSION = 'gzip'); |

For instance:

At this point, as shown in the image above, executing the LIST @<SF_stage> statement will display the newly unloaded file.

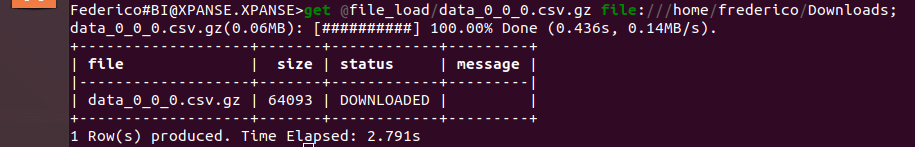

Once you are through, just download it in the chosen local directory:

|

1 |

GET @<SF_stage>/<unloaded_csv_file> FILE:///<path_to_local_directory>; |

(Tip: Snowflake also supports regular expressions)

Now your data are successfully exported!

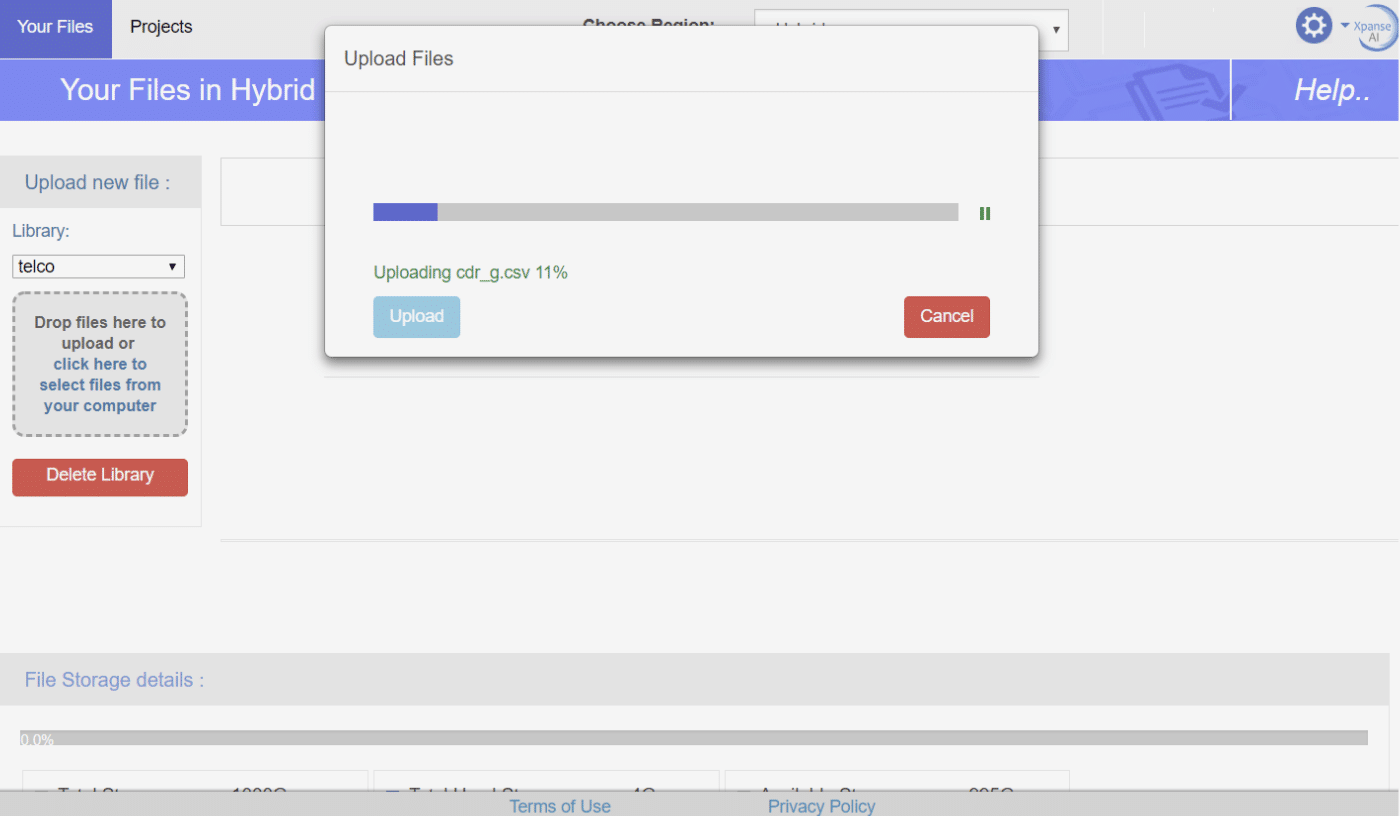

Step 2: Upload CSV files to Xpanse AI.

That took around 30 minutes.

12:00pm – Setting Up a Project

There 5 steps we need to go through to get the Model with Xpanse AI.

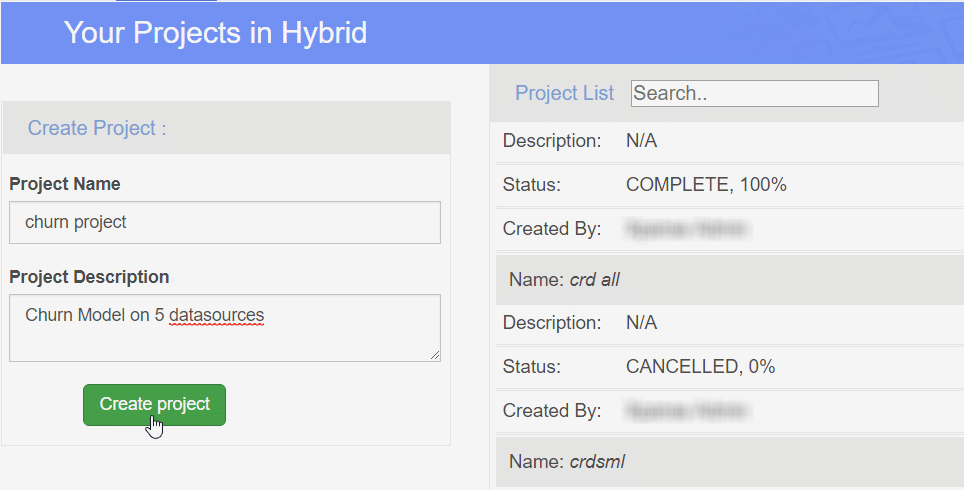

Step 1: Create a new Project

We need to create a new Project which will contain the entire Predictive Analytics workflow.

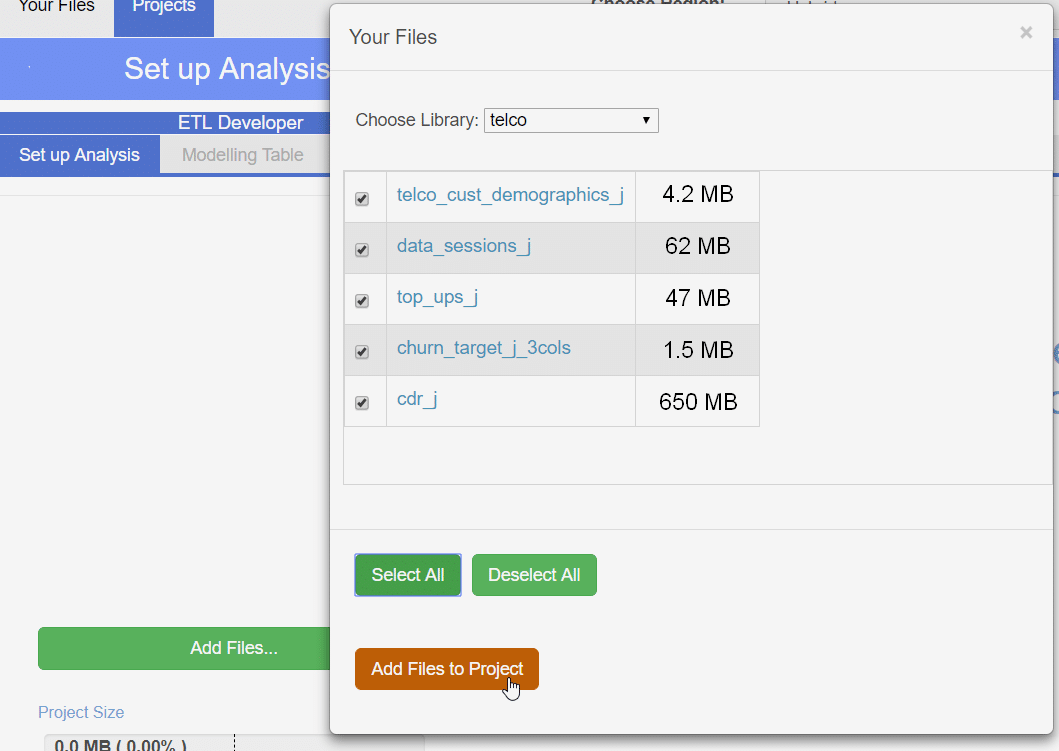

Step 2: Add source data to the Project

The UI allows to quickly add the required data to the Project:

Pick the tables you want to use on a project

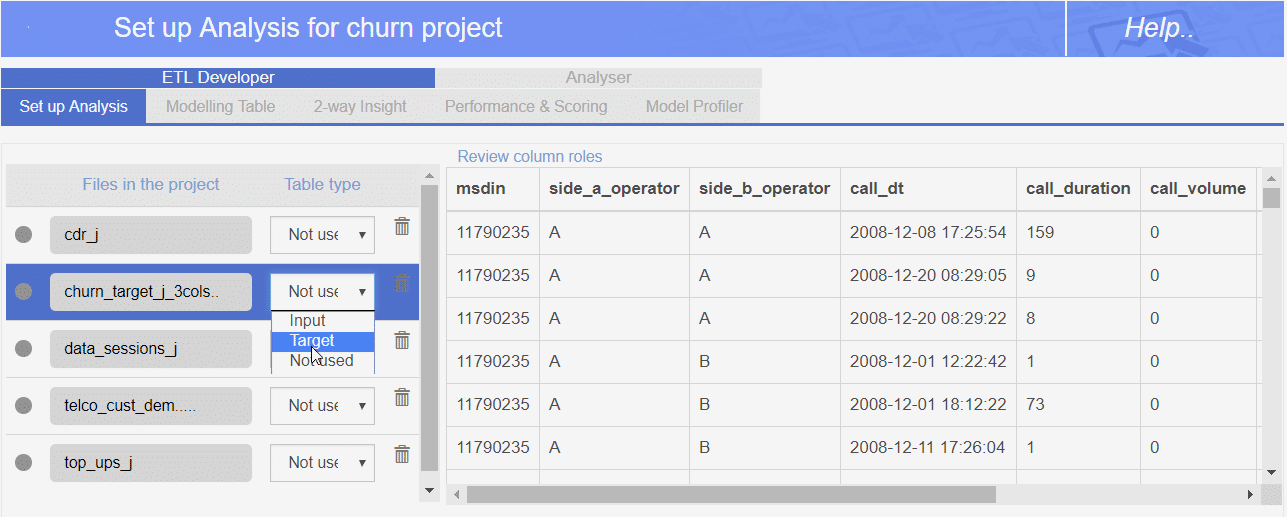

Step 3: Choose the roles for each table.

We need to choose which table is Input and which table contains the Target.

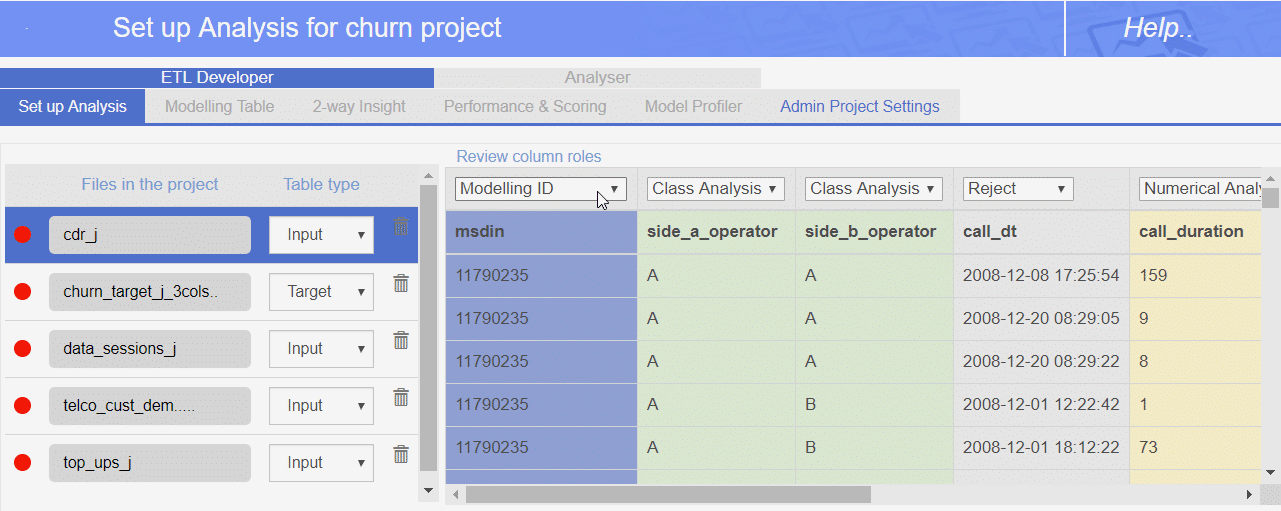

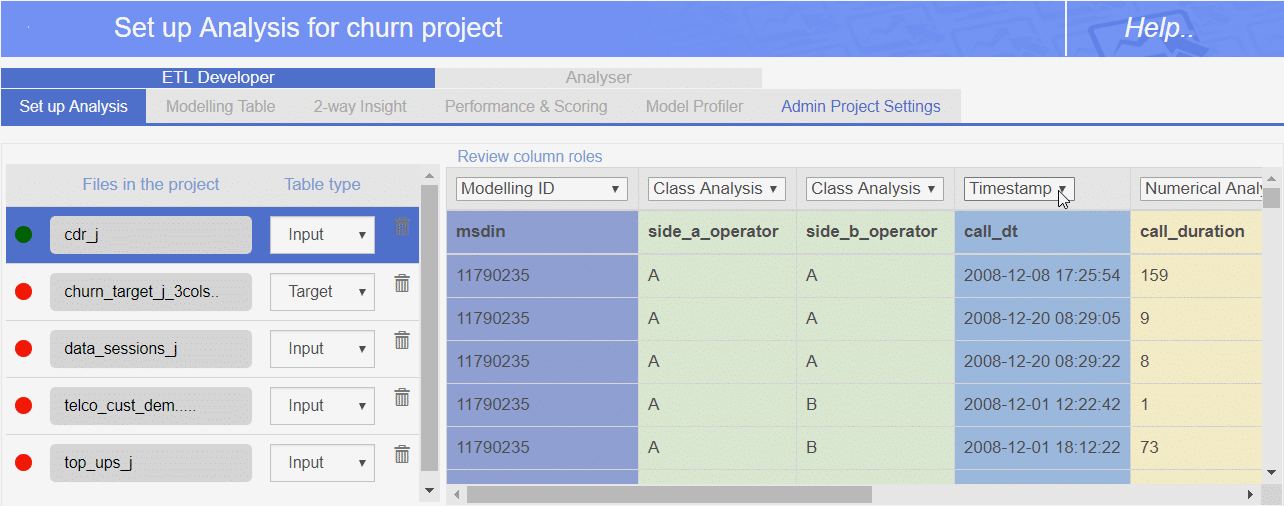

Step 4: Choose Modelling ID and Timestamp

Here comes handy a basic knowledge about the underlying data model.

Each table contains information about Subscribers who are identified by their phone numbers.

We need to point out to Xpanse which column contains this identifier. In our case it’s the column named “msdin”.

The way to do it is to choose the role for that column as “Modelling ID” – which essentially means a “linking key”.

Similarly – for each table we need to specify which column contains the timestamp for the record.

Knowing the exact time of when the information was generated is critical during every Predictive Modelling project and using non-timestamped data introduces the risk of obtaining a wrong model.

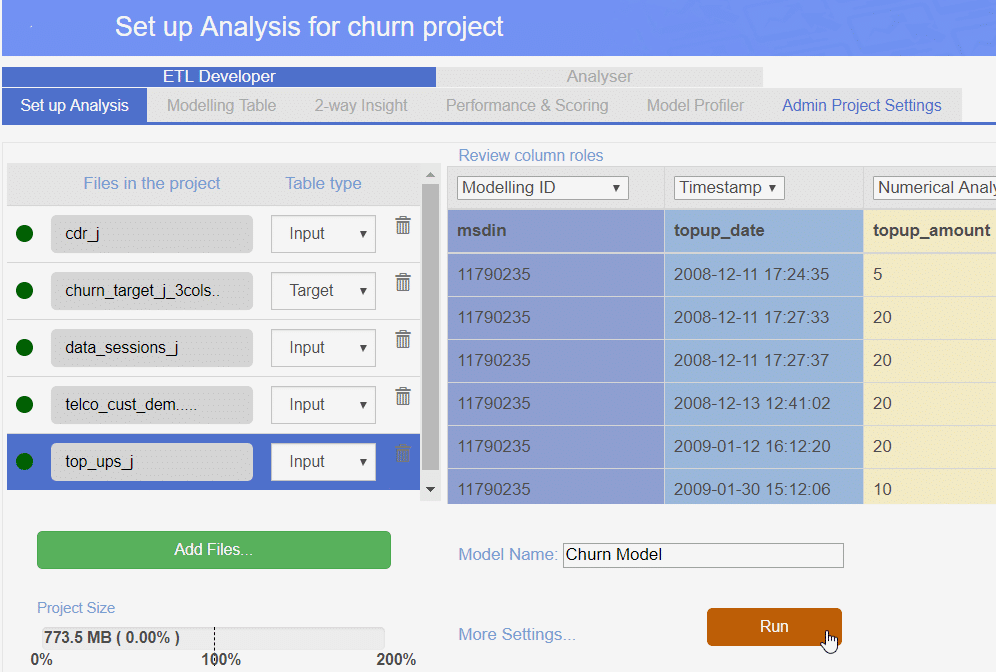

Step 5: Kick off the Project

All we have left is to name the Model and hit Run.

That’s it.

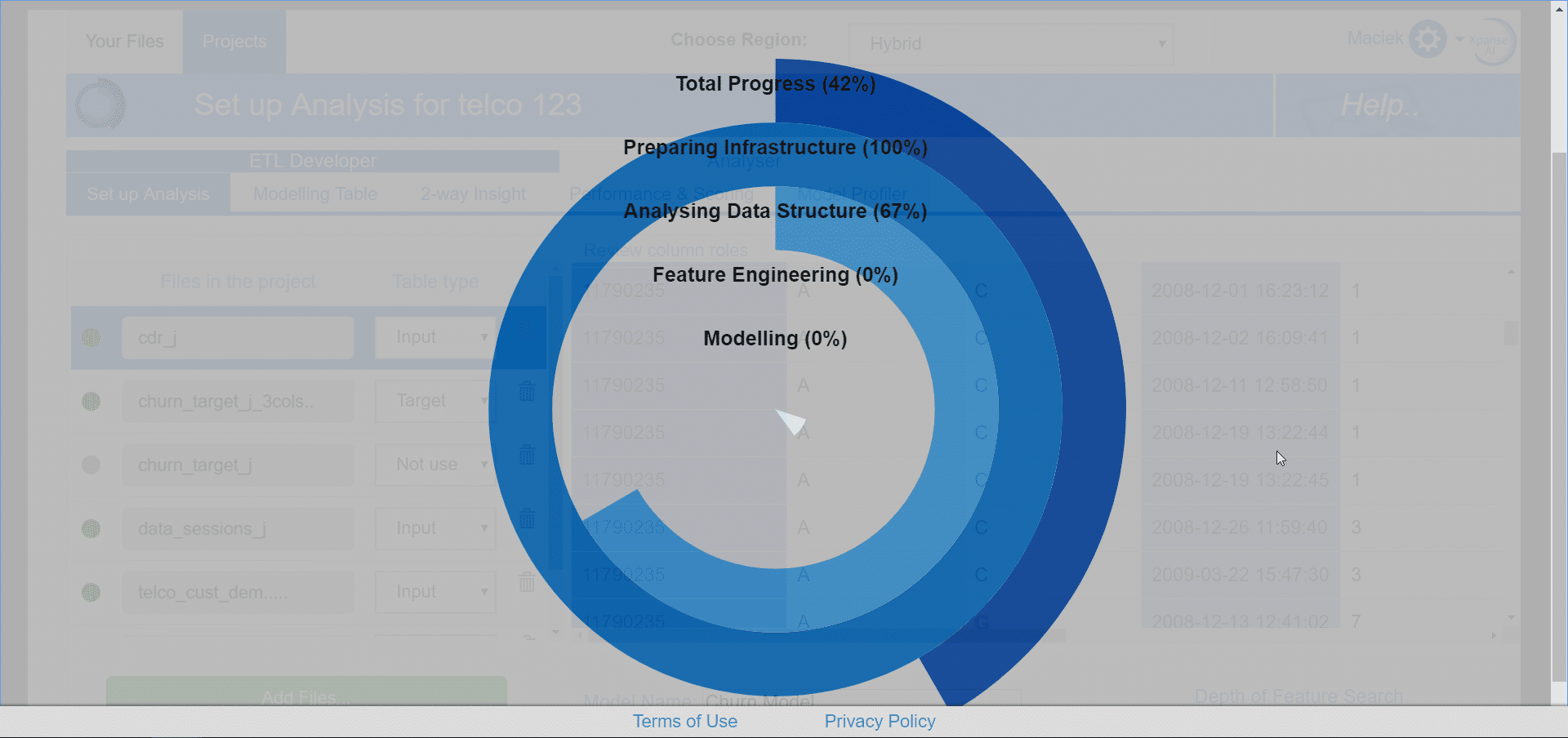

Xpanse AI will:

- analyse the structure of the data

- transform the 5 source tables into the Machine Learning – friendly format

- train the ML models

It will take a bit of time and since there is not much else we can do – it’s a good time for a lunch break! 🙂

12:30pm – Lunch Break

Classic chicken curry with rice & chips mix and a salad.

2:00pm – Reviewing Project Outputs

We are back at our desk and the processing is already finished. We are interested in 3 main outputs:

- Modelling Dataset

- Predictive Model

- Scoring Code

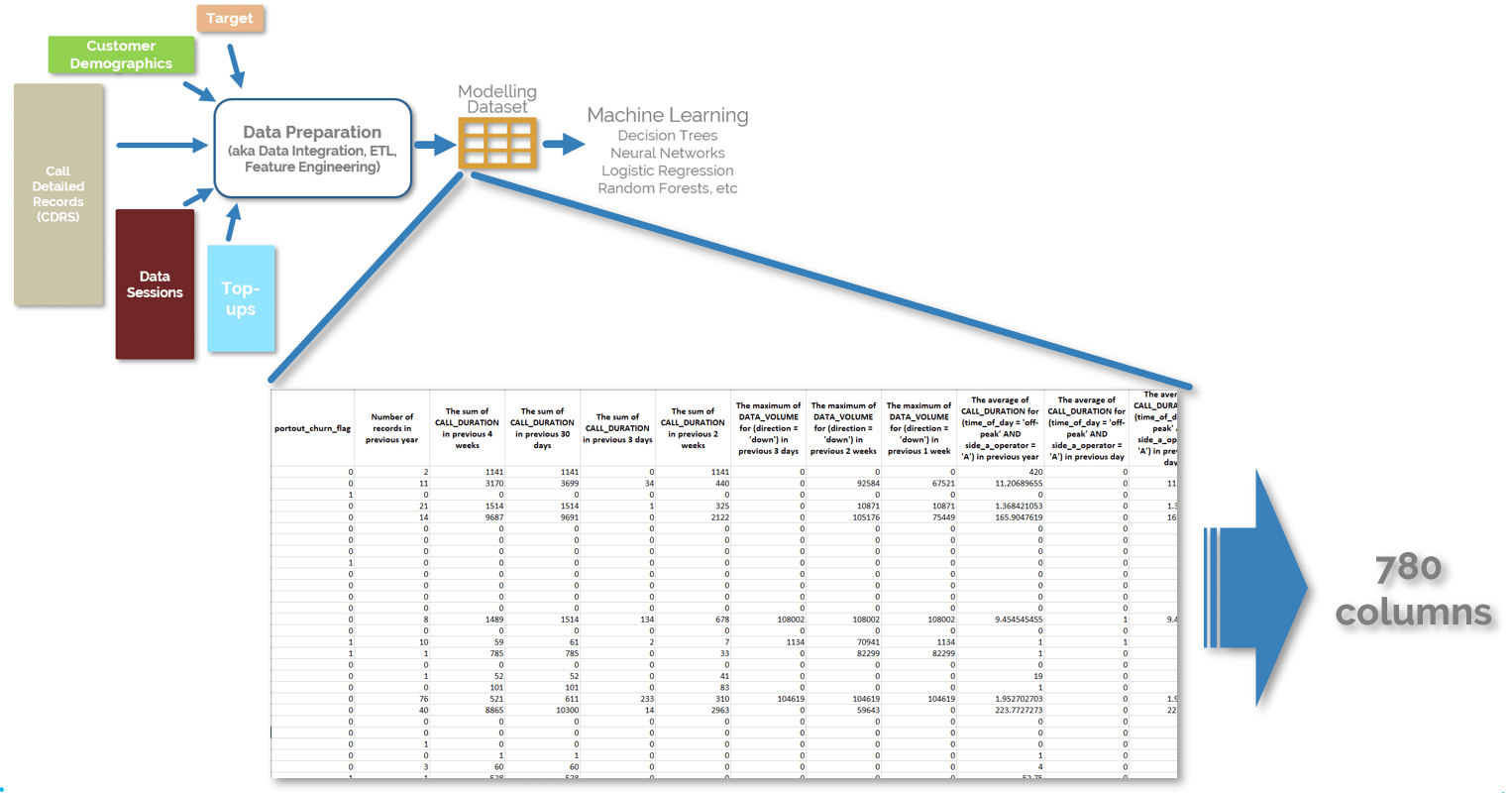

Modelling Dataset

Firstly – let’s take a look at the Modelling Dataset.

Xpanse AI aggregated all 5 tables into one flat Modelling Dataset with 780 columns.

Those columns (aka Features or Variables) contain aggregated information on customers’ behaviours and it’s something that traditionally would require months of manual work.

It’s quite astonishing to see months of Data Engineering designed and executed by a machine within 1 hour.

This is exactly what Machine Learning algos need.

Predictive Model

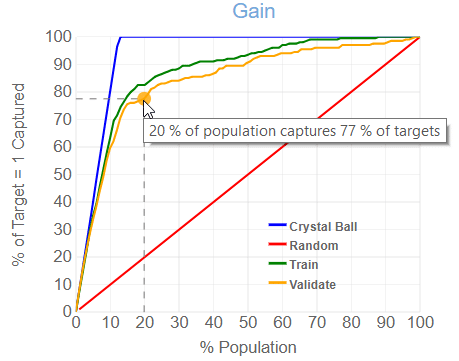

Let’s look what Machine Learning delivered. From the business perspective Models are evaluated based on their “Gain” or “Uplift”. Both are represented as charts and allow to assess how much better the Models are comparing to the random approach to targeting.

This is the Gain chart for our Model:

Reading of that chart is quite simple: as an example – if we target top 20% of risky customers – we will capture 77% of all churners. We can quickly check several cut-off points for the Retention campaign and decide how many customers we want to target with a retention offer.

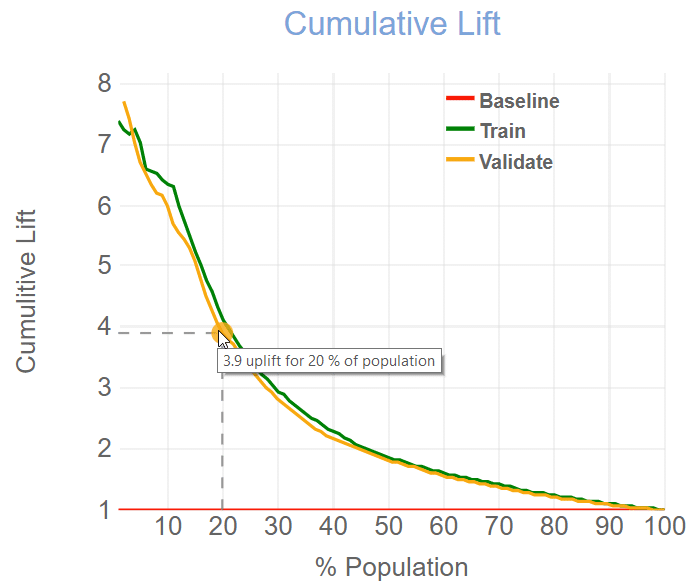

Another way of looking at Model’s accuracy is the Cumulative Lift chart:

If we were choosing the same 20% of our customer base to target with the Retention Campaign – we would “capture” 3.9 times more future churners comparing to a random targeting.

It’s a quite powerful model.

Time to deploy it.

“Deploying” the model means installing it in a place where it can “score” our customers for their churn risk on a regular basis.

Since our data resides in the Snowflake database – this is the best place to deploy the model.

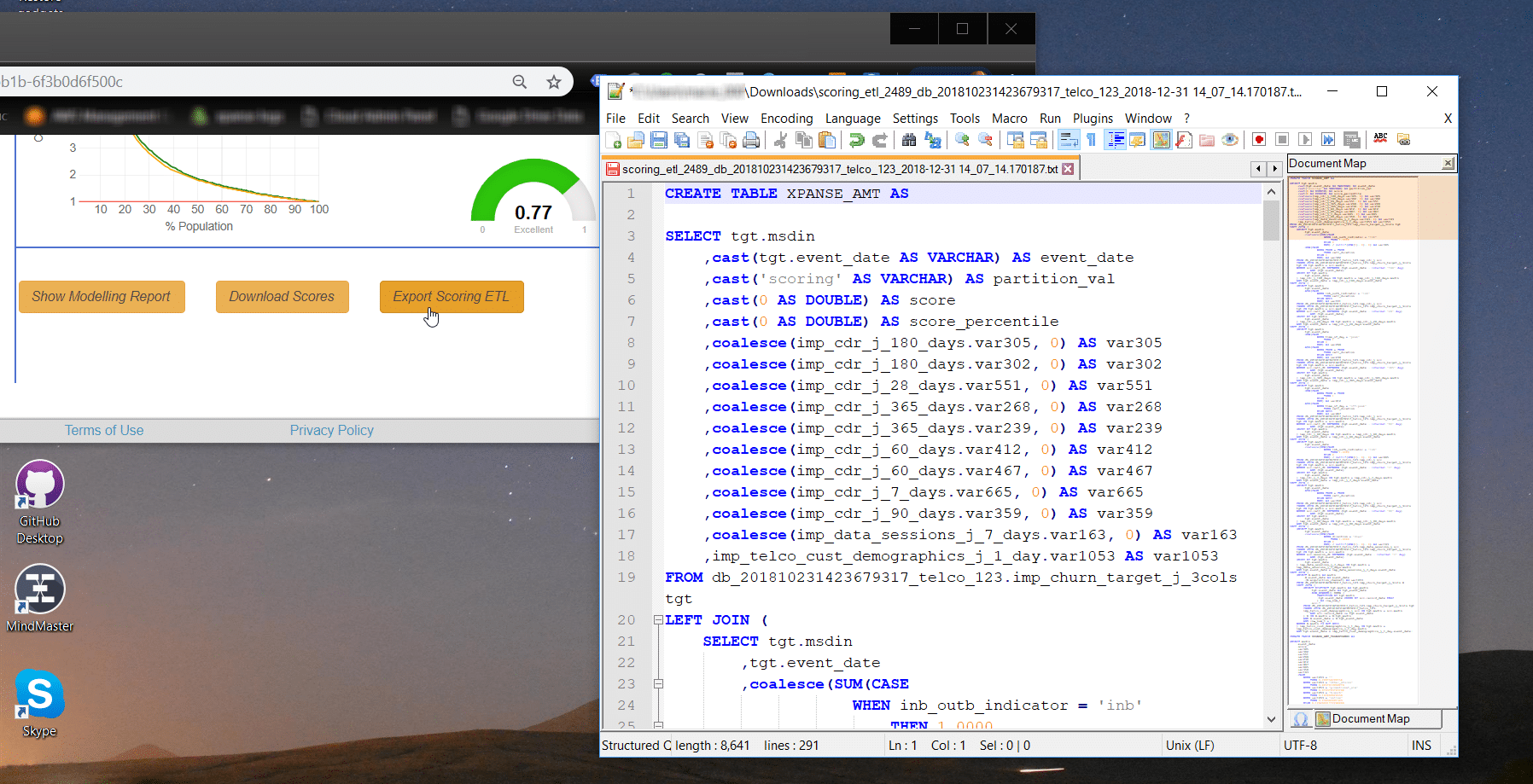

Scoring Code

Here is something that we truly love about Xpanse AI: You can export a deployable model as SQL code.

There are 2 SQL parts to it

- Scoring Table ETL – this code preps the data in our data warehouse to the scoring format

- Scoring Model ETL – this is an SQL representation of the Machine Learning model ready to generate propensity scores based on the data in the Scoring Table.

This makes the Model deployment a breeze comparing to other tools.

Whenever new data arrives, e.g. as part of our nightly batch load or even in real-time we can score the data to determine the likelihood of our customers churning by simply running those two SQL statements.

3:00pm – Deploying Scoring Model on Snowflake

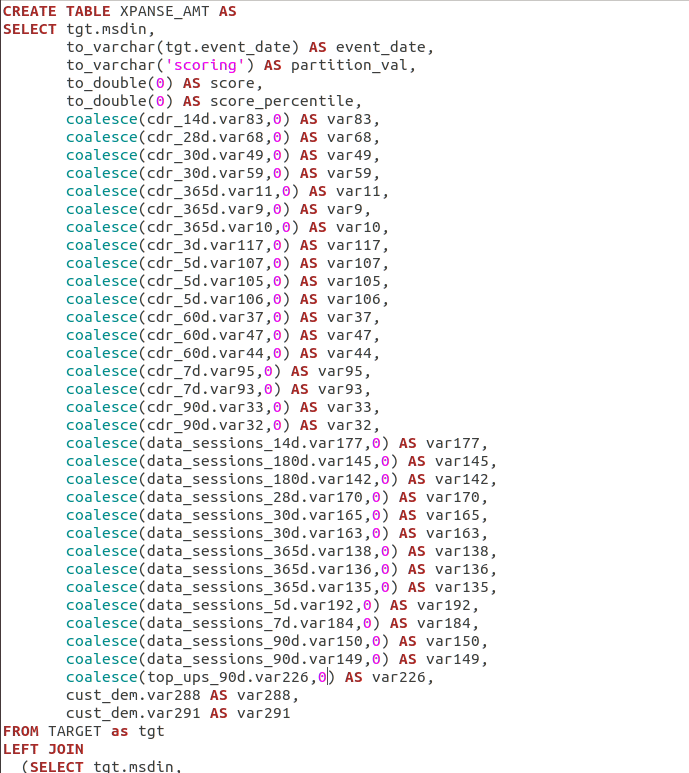

Let’s take the two SQL statements and create two scripts with CREATE OR REPLACE TABLE statements. The two scripts can be included in our nightly data flow and are executed after the main data warehouse model has been populated. A great tool for data orchestration is Apache Airflow, which we have written extensively about in other posts.

Looking at the scripts you can get a better understanding on what exactly is going on. The model is fully transparent. That’s another nice side effect. You can exactly follow the logic of the churn score for your clients.

4:00pm – Test Run

Ok. Ready to rock. Let’s execute the two scripts and look at the results.

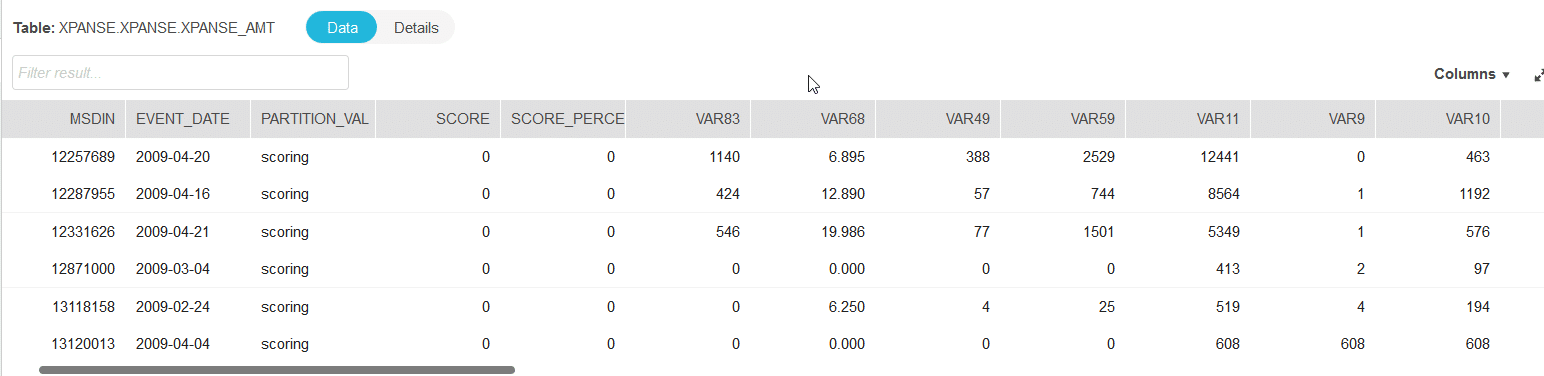

Script number 1 puts the data into the required format for scoring against our model. Let’s have a look at the output.

As you can see, this script has generated the features required by our model, which can be identified by column name VAR and a sequence number. Please also note the default Score of 0. The score will get updated in our next step when we determine the probability of our clients to churn against the model

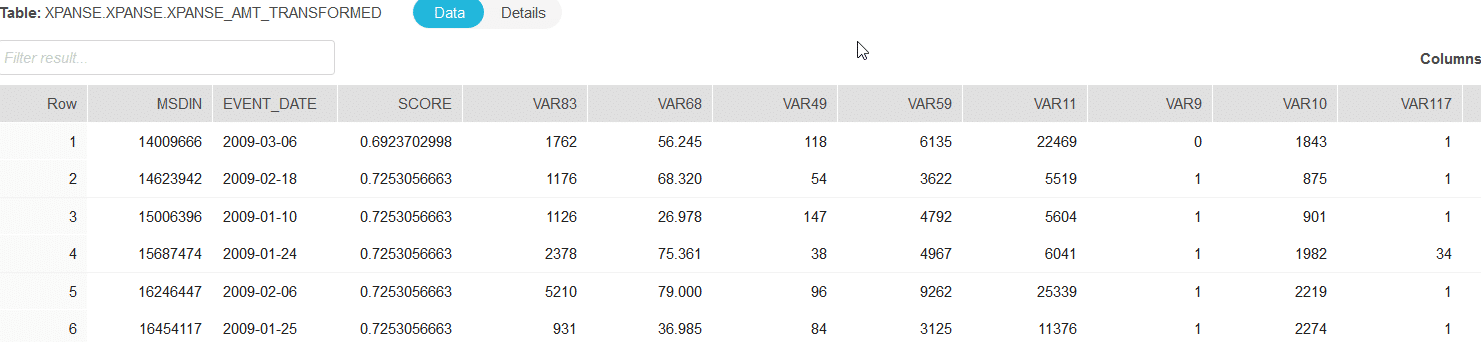

So let’s feed the features into our model and run the script number 2.

You can see that the score has now been populated.

The higher the score the higher the likelihood that a particular customer will churn.

Great stuff, all done!

We can now feed the information of likely churners to our sales team who can take action by making the churn candidates some special offer. As we all know, any analytics project without the appropriate action is pointless.

5:00pm – Guinness in a Pub

Let’s briefly recap what we did before we enjoy the black stuff.

In a first step we exported the training data from our Snowflake data warehouse to the Xpanse platform

Next we gave Xpanse some information on where in the data set it can find the target variable and timestamp information.

In a third step the platform generated the Modelling Dataset and trained the Predictive Model. Xpanse also generated the entire scoring ETL flow as a SQL script.

In the next step we deployed the scoring SQL scripts into our data warehouse and scheduled them for execution as part of our nightly ETL.

In the last step we scored the likelihood of our customers to churn.

All in one day.

Sláinte

Enjoyed this post? Have a look at the other posts on our blog.

Contact us for Snowflake professional services.

We created the content in partnership with Snowflake.