JSON. To ETL or to NoETL? The big data question.

NoETL. The little brother of NoSQL

You have probably come across the term NoSQL. It was coined a few years back to describe a class of database systems that can scale across a large number of nodes for distributed (and sometimes global processing) of transactions (OLTP). Very early technologies were DynamoDB and Cassandra. These technologies trade in scalability for consistency (no strong read consistency and no transactional consistency). Over time the term was overloaded to mean any non-relational datastore. I have even heard people refer to Hadoop as NoSQL. The term NoSQL is unfortunate and a lot of people find it confusing. It actually has nothing to do with the SQL query language. In fact, many NoSQL databases support SQL.

Then there is the term NoETL. You might have come across it in recent conversations, presentations, or articles. It was coined in analogy to NoSQL. If you think that NoSQL is confusing then you are probably glad to hear that you are not alone.

I was intrigued to find out more when I first came across the term. NoETL? No more tedious integration and cleansing of data from different data siloes. Data heaven. This sounds too good to be true… and you can bet it is. When I dug a bit deeper into the concept I quickly learned that the idea of NoETL was only defined for a very very narrow set of data integration problems: the need to convert semi-structured data formats (in particular JSON) to a relational format. A lot of hype was generated around the ability to query JSON with SQL and most databases now have SQL extensions to query JSON.

That all sounds great at first glance but wait until you have heard the full story. Let’s dig a bit deeper into some of the problems you will encounter.

The data model is treated as a blackbox

It is relatively easy to read and understand a database model aka an ER diagram. Relationships between tables and entities are straightforward. This is not the case with a hierarchical document store such as JSON. Relationships are hidden away in arrays and complex nested structures. The more complex the JSON structure, the harder it is to understand.

By storing JSON in the database in a table column, we lose all of this information. Not only do we lose information on the relationships between the tables we also lose information on which fields are mandatory and which are optional.

Development overhead

Each time developers or analysts query JSON data in the database, they have to write awkward code to unnest the data from the JSON structures. This is quite error prone. Each analyst/developer has to write their own SQL to unnest the data, which is very repetitive. You could tackle this problem by creating views, but how do you make sure that they will be used. For complex JSON you would need to create a large number of views.

SELECT

t.weather[0][‘description’] AS weather_desc_1,

t.weather[1][‘description’] AS weather_desc_2

FROM dfs.default../apache-drill-1.0.0/sample-data/weather_14.json AS t

The more complex the JSON and the more levels of nesting we have, the more complex this gets. Very painful.

Each time data analysts query the data they have to rewrite this awkward SQL code

Querying reference data

JSON data often contains both transactional and reference data in the same file, e.g. measurements of temperatures in multiple cities across the globe. In order to just query the reference date and get a list of the cities we need to scan all of the transaction records as well (it depends on how the JSON file is constructed).

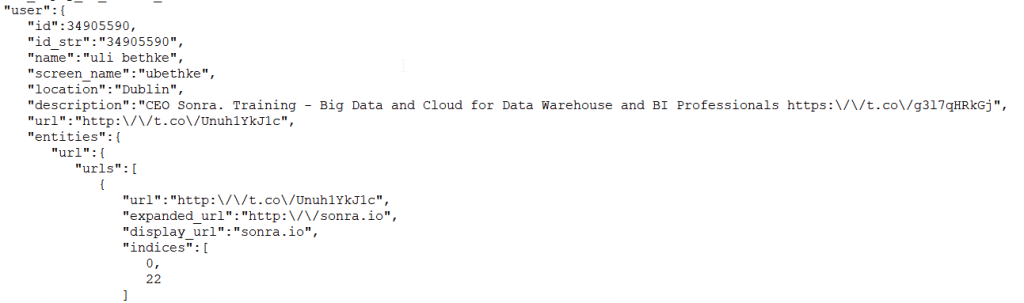

Let’s have a look at an example. In the example below, the name of the user who is tweeting is a reference data point (similar to a dimension attribute in a star schema). If I want to get a list of users who have tweeted, I need to go through all of the tweets. This is an expensive operation and quite unnecessary. It would be so much more efficient to either separate the reference data out into a separate entity or at least store the tweet together with reference data in columnar storage.

The problem gets even worse if we would like to join the user reference data to some other user data that is not in the tweet, e.g. a customer database.

Data quality

Without an ETL process, only very simple data quality defects can be handled. e.g. substituting one code for another code. If the quality issues are more complex, e.g. duplicated records or key violations then things get more complicated.

No ANSI standard for extensions

A smaller problem is that the various operators to work with JSON data in SQL have not found their way into the ANSI standard. Database vendors all have their own proprietary formats to query JSON. While the concepts are the same, the syntax between databases differs.

Performance

Let’s look at some performance considerations. Loading JSON data into an MPP database such as Redshift, Teradata, Vertica, Greenplum etc. doesn’t make much sense.

We can’t define a meaningful distribution key to properly distribute our JSON data across the cluster.

We can’t define sort keys to eliminate retrieval of blocks from disk when filtering. This results in completely unnecessary I/0.

We can’t columnar compress our data.

Joining data to other relational tables is painful and slow.

Parsing out data elements from JSON can be extremely CPU intensive. It might choke the cluster and steal resources from other more important queries. Have a read through this post by the guys from Amplitude

If you wanted to query on any user or event property, you had to use the json_extract_path_text function to parse the entire JSON and extract the specific property you were interested in. We observed that this json_extract_path_text function was consistently the most CPU-intensive computation on our Redshift clusters https://amplitude.com/blog/2015/06/05/optimizing-redshift-performance-with-dynamic-schemas/

Handling dynamic schema

One of the features of JSON is that the structure is flexible and as a result the schema is dynamic. Developers are free to add or remove fields. However, as the saying goes: one person’s gain is another person’s pain (at least I think it’s a saying). It is very hard for analysts to keep track of changes in a dynamic schema. Furthermore, many SQL engines don’t support dynamic JSON schemas https://dzone.com/articles/apache-drill-and-the-lack-of-support-for-nested-ar.

Heterogeneous types

JSON files normally don’t come with a schema. There is no XSD equivalent. As a result we can’t define data types and may encounter heterogeneous or mixed data types in our JSON files. SQL engines and databases often struggle to interpret the data correctly and fail the query.

Drill cannot read JSON files containing changes in the schema.

Complex JSON

The lights go out for complex JSON

Complex nested data is not handled at all or not handled well, e.g. in Drill Complex arrays and maps can be difficult or impossible to query. http://drill.apache.org/docs/json-data-model/#limitations-and-workarounds

Larger JSON files

You are out of luck if your JSON files are large.

Currently, Drill cannot manage lengthy JSON objects, such as a gigabit JSON file. Finding the beginning and end of records can be time consuming and require scanning the whole file. Workaround: Use a tool to split the JSON file into smaller chunks of 64-128MB or 64-256MB

Split them into smaller chunks. What happened to NoETL? I think enough said about it 🙂

What should I do with my JSON files?

Drop the whole idea of NoETL? There is one thing that tops NoETL. It’s called fully automated ETL.

You can easily automate the conversion of JSON to a relational schema with Flexter Data Liberator, our ETL tool for JSON and XML. Flexter addresses and fixes all of the issues I have outlined above. Take any number of complex JSON files and convert them to a relational database, tabular data in text, or Avro/ORC/Parquet on Hadoop.

Flexter analyses a statistically significant sample of your JSON files, generates an optimised relational target schema, generates all of the mappings, generates the parent-child relationships, and then auto-populates the target schema with source data. Who told you, you can’t have your cake and eat it.

You can run the conversion process in batch or streaming mode. We support schema evolution and you can view structural differences between different versions of your JSON files. The JSON files you feed to Flexter can have significant structural differences. We can still handle them.

If Flexter comes across a new element in the JSON file it won’t abort the process. No. It will handle this event gracefully. The information is logged and an updated target schema can be generated to include the new element.

The original use case for Flexter is the processing of complex XML files based on industry data standards. In comparison, processing JSON files is trivial. With Flexter for JSON we are bringing all of the power of Flexter for XML to the world of JSON.

What has been your experience of working with JSON files? Reach out to us or leave a comment.